In the “Hello World” phase of Generative AI, we built single agents with simple while loops: Think -> Act -> Observe.

In production, this architecture collapses. Single agents suffer from context pollution and a lack of specialization, and they struggle to recover from errors. The industry is shifting toward Multi-Agent Systems (MAS), where the primary engineering challenge isn’t prompt engineering but Orchestration: managing the state, message passing, and control flow between autonomous compute units.

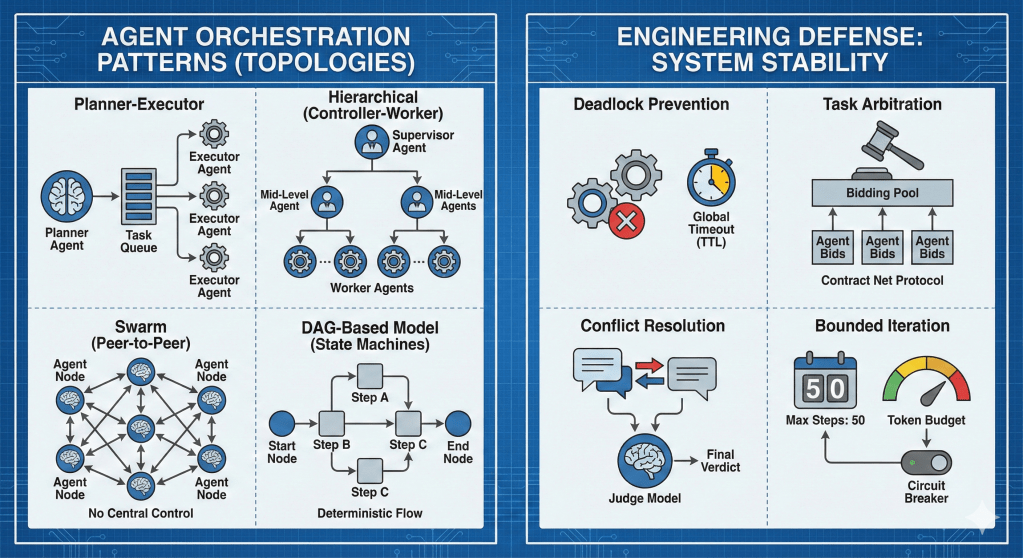

This guide breaks down the four dominant orchestration patterns and, more importantly, how to prevent them from deadlocking or diverging in production.

1. The Planner-Executor Pattern

Topology: Hub-and-Spoke (Dynamic)

This pattern decouples reasoning from execution. A “Planner” agent (usually a high-reasoning model like GPT-4o or Claude 3.5 Sonnet) analyzes a user request and generates a manifest of tasks. These tasks are then pushed to a queue for “Executor” agents (often smaller, faster models or non-LLM scripts) to process.

- When to use: Non-deterministic workflows where the steps aren’t known until runtime (e.g., “Research this company and generate a SWOT analysis”).

- The Bottleneck: The Planner is a single point of failure. If the plan is flawed, the executors waste compute on useless tasks.

- Engineering Note: Implement Plan Repair. The Planner must not just fire-and-forget; it must subscribe to the Executors’ output stream and dynamically rewrite the remaining steps of the plan if an early step fails.
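A minimal sketch of the plan-repair loop. `plan`, `execute`, and `repair_plan` are hypothetical stand-ins for the Planner's LLM call, an Executor, and the Planner's repair call. A synchronous loop replaces a real output-stream subscription, and `max_repairs` is an assumed safeguard so a step that keeps failing cannot trigger repairs forever.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class StepResult:
    step: str
    ok: bool
    output: str


def plan(request: str) -> list[str]:
    # Hypothetical Planner: in practice an LLM call that returns a task manifest.
    return ["fetch_financials", "fetch_news", "write_swot"]


def execute(step: str) -> StepResult:
    # Hypothetical Executor (small model or plain script); here one step always fails.
    if step == "fetch_financials":
        return StepResult(step, False, "API timeout")
    return StepResult(step, True, f"done: {step}")


def repair_plan(failed: StepResult, remaining: list[str]) -> list[str]:
    # Hypothetical repair: the Planner swaps in a fallback for the failed step
    # (or drops it if none exists) and keeps the rest of the plan.
    fallbacks = {"fetch_financials": "fetch_financials_from_cache"}
    fallback = fallbacks.get(failed.step)
    return ([fallback] if fallback else []) + remaining


def run(request: str, max_repairs: int = 3) -> list[StepResult]:
    queue = deque(plan(request))
    results: list[StepResult] = []
    repairs = 0
    while queue:
        result = execute(queue.popleft())
        results.append(result)
        if not result.ok:
            if repairs >= max_repairs:
                raise RuntimeError(f"plan repair budget exhausted at {result.step}")
            repairs += 1
            # Feed the failure back to the Planner and rewrite only the remaining steps.
            queue = deque(repair_plan(result, list(queue)))
    return results


for r in run("Research this company and generate a SWOT analysis"):
    print(r.step, "ok" if r.ok else f"failed ({r.output})")
```

The key design choice is that repair touches only the steps still in the queue; work that already succeeded is never redone.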

2. The Hierarchical Pattern (Controller-Worker)

Topology: Tree Structure

This pattern enforces strict encapsulation of scope. A “Supervisor” agent routes tasks to specific “Sub-agents,” each with its own distinct tools and system prompt. The Sub-agents report back only to their direct Supervisor.

- When to use: Complex enterprise processes (e.g., Software Development: Product Manager $\rightarrow$ Tech Lead $\rightarrow$ Dev/QA/Design).

- The Advantage: Context Sanitization. The “Dev” agent doesn’t need to know the entire conversation history of the “Product Manager,” only the specific ticket it needs to code. This reduces context window costs and hallucination rates.

- Deadlock Risk: Recursive delegation. If Agent A delegates to B and B delegates back to A, the delegation recurses without end: the agent-level equivalent of a stack overflow.
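A sketch of a Supervisor tree that hands each sub-agent only the ticket and refuses recursive delegation. The org chart, `delegate`, and `DelegationCycleError` are illustrative assumptions. The guard tracks the chain of agents a task has passed through and stops when an agent reappears or the chain exceeds an assumed depth cap.

```python
class DelegationCycleError(RuntimeError):
    """Raised when a task would loop back to an agent already on the delegation chain."""


def delegate(graph: dict[str, list[str]], agent: str, ticket: str,
             chain: tuple[str, ...] = (), max_depth: int = 5) -> list[str]:
    chain = chain + (agent,)
    # Guard 1: an agent reappearing in the chain means A -> B -> A.
    if agent in chain[:-1]:
        raise DelegationCycleError(" -> ".join(chain))
    # Guard 2: a hard depth cap also catches long chains that never repeat.
    if len(chain) > max_depth:
        raise DelegationCycleError("depth limit: " + " -> ".join(chain))
    subs = graph.get(agent, [])
    if not subs:
        # Leaf worker: it receives only the ticket, not the supervisors' history.
        return [f"{agent} handled {ticket}"]
    results: list[str] = []
    for sub in subs:
        results.extend(delegate(graph, sub, ticket, chain, max_depth))
    return results


ORG = {"product_manager": ["tech_lead"], "tech_lead": ["dev", "qa", "design"]}
print(delegate(ORG, "product_manager", "TICKET-42"))
```

Passing only `ticket` down the tree is also what gives the Context Sanitization benefit: no sub-agent ever receives its supervisor's conversation.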

3. The Swarm Pattern (Peer-to-Peer)

Topology: Mesh / Graph

Agents act as independent nodes that can “hand off” tasks to any other agent based on availability or capability, without a central supervisor. OpenAI’s Swarm framework popularized this “handoff” function concept.

- When to use: Open-ended exploration, creative brainstorming, or systems where agents are highly specialized but equal in hierarchy.

- The Risk: Message Thrashing. Without a supervisor to say “stop,” agents can endlessly pass the “hot potato” back and forth, burning tokens without converging on a solution.
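One way to stop message thrashing without a central supervisor is to cap total handoffs and count repeats of each directed handoff. The sketch below is not OpenAI Swarm's API. `Turn`, `run_swarm`, `max_handoffs`, and `max_bounces` are hypothetical names, and agents are plain functions that either answer or name the next agent.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Turn:
    handoff_to: Optional[str] = None  # next agent's name, if this agent hands off
    answer: Optional[str] = None      # final answer, if this agent is done


Agent = Callable[[str], Turn]


def run_swarm(agents: dict[str, Agent], start: str, task: str,
              max_handoffs: int = 10, max_bounces: int = 2) -> str:
    current = start
    path = [start]
    bounces: Counter = Counter()
    for _ in range(max_handoffs):
        turn = agents[current](task)
        if turn.answer is not None:
            return turn.answer
        nxt = turn.handoff_to
        if nxt is None:
            raise RuntimeError(f"{current} neither answered nor handed off")
        # Count each directed handoff; the same edge repeating is the "hot potato".
        bounces[(current, nxt)] += 1
        path.append(nxt)
        if bounces[(current, nxt)] > max_bounces:
            raise RuntimeError("thrashing: " + " -> ".join(path))
        current = nxt
    raise RuntimeError(f"no answer after {max_handoffs} handoffs: " + " -> ".join(path))


converging = {
    "researcher": lambda task: Turn(handoff_to="writer"),
    "writer": lambda task: Turn(answer=f"draft for {task!r}"),
}
thrashing = {
    "researcher": lambda task: Turn(handoff_to="writer"),
    "writer": lambda task: Turn(handoff_to="researcher"),
}
print(run_swarm(converging, "researcher", "brainstorm names"))
```

The two limits are complementary: `max_bounces` catches a tight A/B ping-pong early, while `max_handoffs` bounds longer cycles that never repeat the same edge often.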

4. The DAG-Based Model (State Machines)

Topology: Directed Acyclic Graph

The most robust “engineering” pattern. You do not let the LLM decide the control flow. Instead, you hard-code a Directed Acyclic Graph (DAG) where nodes are agents and edges are deterministic transition rules.

- When to use: Compliance-heavy workflows (e.g., Financial transactions, Medical triage). You cannot allow an agent to “decide” to skip the “Fraud Check” node.

- The Trade-off: Reduced autonomy for higher reliability.
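A minimal DAG state machine for the fraud-check example. The node functions, the fraud scorer, and the transition table are hypothetical. The point is that transitions are plain code reading the state, and the declared edges are checked for cycles at startup with Python's standard `graphlib.TopologicalSorter` (Python 3.9+).

```python
from graphlib import TopologicalSorter
from typing import Callable, Optional

State = dict


def intake(s: State) -> State:
    s["validated"] = s["amount"] > 0
    return s


def fraud_check(s: State) -> State:
    # Hypothetical scorer; in practice a model or rules engine.
    s["fraud_score"] = 0.9 if s["amount"] > 10_000 else 0.1
    return s


def finish(status: str) -> Callable[[State], State]:
    def node(s: State) -> State:
        s["status"] = status
        return s
    return node


NODES: dict[str, Callable[[State], State]] = {
    "intake": intake,
    "fraud_check": fraud_check,
    "approve": finish("approved"),
    "manual_review": finish("manual_review"),
    "reject": finish("rejected"),
}

# Every legal edge, declared up front. "intake" has no edge to "approve",
# so no path can skip the fraud check.
ALLOWED: dict[str, set[str]] = {
    "intake": {"fraud_check", "reject"},
    "fraud_check": {"approve", "manual_review"},
    "approve": set(), "manual_review": set(), "reject": set(),
}

# Deterministic transition rules: plain code over the state, not an LLM decision.
RULES: dict[str, Callable[[State], Optional[str]]] = {
    "intake": lambda s: "fraud_check" if s["validated"] else "reject",
    "fraud_check": lambda s: "manual_review" if s["fraud_score"] > 0.5 else "approve",
}

# Refuse to start if the declared graph has a cycle (raises graphlib.CycleError).
# Edge direction does not matter for cycle detection.
TopologicalSorter(ALLOWED).prepare()


def run(state: State, start: str = "intake") -> tuple[State, list[str]]:
    node: Optional[str] = start
    path: list[str] = []
    while node is not None:  # terminates because every move follows an acyclic edge
        path.append(node)
        state = NODES[node](state)
        nxt = RULES[node](state) if node in RULES else None
        if nxt is not None and nxt not in ALLOWED[node]:
            raise ValueError(f"illegal transition {node} -> {nxt}")
        node = nxt
    return state, path


print(run({"amount": 500}))
print(run({"amount": 50_000}))
```

An agent can still do the work inside a node (e.g., write the fraud summary), but it never chooses the next node.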

Engineering Defense: Preventing System Collapse

Building the agents is easy; keeping them running is hard. Here are the technical implementations for stability.

Deadlock Prevention Strategies

In multi-agent systems, a deadlock occurs when Agent A waits for input from Agent B, while Agent B waits for Agent A.

- Resource Ordering: Enforce a strict hierarchy of resource acquisition. An agent must acquire the “Database Write Lock” before asking for the “API Token,” never the reverse.

- The “Poison Pill” Message: If the orchestration layer detects a cycle (by running cycle detection on a Wait-For Graph that records which agent is blocked on which), it sends a high-priority “TERMINATE” message to the lowest-priority agent involved, forcing it to crash and release its resources.

- Global Timeouts (TTL): Every inter-agent message must have a Time-To-Live. If a request isn’t fulfilled within $T$ seconds, the requesting agent falls back to a failure state rather than hanging indefinitely.
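The three defenses can be sketched together. The sketch assumes each agent waits on at most one other agent at a time, so the wait-for graph fits in a dict and a cycle is found by following the chain. `LOCK_ORDER`, `find_cycle`, `pick_victim`, `inject_poison_pill`, and `Request` are hypothetical names, and each inbox is a plain list rather than a real message broker.

```python
import time
from dataclasses import dataclass, field
from typing import Optional

# Resource ordering: every agent acquires locks in this one global order (hypothetical lock names).
LOCK_ORDER = ["db_write_lock", "api_token"]


def acquire_in_order(requested: set[str]) -> list[str]:
    """Return the requested locks in the globally agreed acquisition order."""
    return sorted(requested, key=LOCK_ORDER.index)


def find_cycle(wait_for: dict[str, str]) -> Optional[list[str]]:
    """wait_for maps each blocked agent to the single agent it is waiting on.
    Follow the chain from every agent; revisiting a node means a cycle."""
    for start in wait_for:
        seen: list[str] = []
        node: Optional[str] = start
        while node is not None and node not in seen:
            seen.append(node)
            node = wait_for.get(node)
        if node is not None:
            return seen[seen.index(node):]  # only the agents that form the cycle
    return None


def pick_victim(cycle: list[str], priority: dict[str, int]) -> str:
    """Lowest priority value loses (assumption: higher number = more important)."""
    return min(cycle, key=lambda agent: priority[agent])


def inject_poison_pill(inboxes: dict[str, list[str]], victim: str) -> None:
    # Put TERMINATE at the front so the victim handles it before anything else.
    inboxes.setdefault(victim, []).insert(0, "TERMINATE")


@dataclass
class Request:
    sender: str
    recipient: str
    body: str
    ttl_s: float
    sent_at: float = field(default_factory=time.monotonic)

    def expired(self, now: Optional[float] = None) -> bool:
        """True once the request has been outstanding longer than its TTL."""
        now = time.monotonic() if now is None else now
        return now - self.sent_at > self.ttl_s


wait_for = {"planner": "coder", "coder": "reviewer", "reviewer": "planner"}
priority = {"planner": 3, "coder": 2, "reviewer": 1}
inboxes = {"reviewer": ["please review PR"]}

cycle = find_cycle(wait_for)
if cycle:
    inject_poison_pill(inboxes, pick_victim(cycle, priority))
print(cycle, inboxes)
```

`time.monotonic()` is used for TTLs because, unlike wall-clock time, it cannot jump backwards when the system clock is adjusted.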

Task Arbitration Models

When multiple agents can perform a task, who should?

- Contract Net Protocol (CNP):

  - Announcement: The orchestrator broadcasts: “I need a Python script written.”

  - Bidding: Agent A (Coder) bids: “Confidence 0.9, Cost $0.02.” Agent B (Generalist) bids: “Confidence 0.6, Cost $0.01.”

  - Award: The orchestrator awards the task to Agent A based on a weighted utility function that favors confidence over cost.

- Priority Queues: Agents do not pull from a FIFO queue. They pull from a Priority Queue based on semantic urgency. “Security Alert” tasks bypass “Summarization” tasks.
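The award step and the priority queue, sketched in Python. The utility `confidence - cost_weight * cost_usd` is one assumed form of weighted utility (the default weight of 10 is arbitrary), and the `URGENCY` table is a hypothetical stand-in for whatever classifier assigns semantic urgency in a real system.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass
class Bid:
    agent: str
    confidence: float  # self-reported chance of success, 0..1
    cost_usd: float    # estimated cost of doing the task


def award(bids: list[Bid], cost_weight: float = 10.0) -> Bid:
    # Assumed utility: confidence minus weighted cost; the highest score wins.
    return max(bids, key=lambda b: b.confidence - cost_weight * b.cost_usd)


# Hypothetical urgency table; lower number = served first.
URGENCY = {"security_alert": 0, "bug_report": 1, "summarization": 2}


class TaskQueue:
    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._arrival = itertools.count()  # tie-breaker: FIFO within one urgency level

    def push(self, kind: str, payload: str) -> None:
        heapq.heappush(self._heap, (URGENCY[kind], next(self._arrival), payload))

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]


bids = [Bid("A (Coder)", 0.9, 0.02), Bid("B (Generalist)", 0.6, 0.01)]
print(award(bids).agent)

q = TaskQueue()
q.push("summarization", "summarize standup notes")
q.push("security_alert", "leaked API key in repo")
print(q.pop())
```

With `cost_weight=10`, Agent A scores 0.9 - 0.2 = 0.7 and Agent B scores 0.6 - 0.1 = 0.5, so A wins; raising the weight to 50 flips the award to B. The arrival counter in each heap entry keeps tasks of equal urgency in arrival order.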

Conflict Resolution Logic

When Agent A says “Buy” and Agent B says “Sell,” how does the system proceed?

- Model-Based Judge: A separate, highly capable LLM (the “Judge”) is fed the conflicting outputs and a “Constitution” prompt to render a final verdict.

- Voting Ensembles: Run the task $N$ times (or with $N$ agents). Simple majority rules. (Expensive but robust).

- Random Walk Avoidance: If agents are stuck in a negotiation loop for more than 3 turns (thrashing), the system forces a random choice or escalates to a human (human-in-the-loop).
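Voting and the turn cap, sketched in Python. `majority_vote` treats a simple majority as more than half of the votes cast, and `resolve` re-runs a hypothetical `propose` callback (one negotiation round returning each agent's position) until a majority emerges or the assumed `max_turns=3` cap is hit, then hands the last round to an `escalate` callback. The Model-Based Judge is not shown, since it needs an actual LLM call.

```python
import random
from collections import Counter
from typing import Callable, Optional


def majority_vote(outputs: list[str]) -> Optional[str]:
    """Return the answer backed by more than half the votes, else None."""
    if not outputs:
        return None
    answer, count = Counter(outputs).most_common(1)[0]
    return answer if count > len(outputs) / 2 else None


def escalate_to_human(positions: list[str]) -> str:
    # Placeholder: a real system would open a review ticket here.
    return "ESCALATED_TO_HUMAN"


def resolve(propose: Callable[[int], list[str]], max_turns: int = 3,
            escalate: Callable[[list[str]], str] = escalate_to_human) -> str:
    """propose(turn) runs one negotiation round and returns each agent's position."""
    positions: list[str] = []
    for turn in range(max_turns):
        positions = propose(turn)
        winner = majority_vote(positions)
        if winner is not None:
            return winner
    # Thrashing: no majority after max_turns rounds.
    return escalate(positions)


print(majority_vote(["Buy", "Sell", "Buy"]))
print(resolve(lambda turn: ["Buy", "Sell"]))
print(resolve(lambda turn: ["Buy", "Sell"], escalate=random.choice))
```

Passing `escalate=random.choice` gives the forced-random-choice variant instead of human escalation.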

Bounded Iteration Frameworks

Never allow while(true).

- Step limits: Hard cap on total graph transitions (e.g., max_steps=50).

- Token Budgeting: The orchestrator tracks token usage across the session. If the session burns $5.00 without a FINAL_ANSWER signal, the circuit breaker trips.

- Entropy Checks: If an agent’s output is near-identical to its output from 2 turns ago (e.g., the cosine similarity of their embeddings exceeds a threshold), it is looping. Trigger a stop or a temperature increase to force divergence.
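A sketch of all three bounds in one loop. `step` is a hypothetical callback that runs one agent turn and returns its text and dollar cost (token usage already converted to dollars, an assumption). `embed` is a bag-of-words stand-in for a real embedding model, and the 0.95 similarity threshold is an assumed value. On a detected loop this sketch simply stops; the temperature-increase alternative would replace that `raise`.

```python
import math
from collections import Counter
from typing import Callable


class CircuitBreakerTripped(RuntimeError):
    pass


def embed(text: str) -> Counter:
    # Stand-in for a real embedding model: a bag-of-words count vector.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def run_bounded(step: Callable[[int], tuple[str, float]], max_steps: int = 50,
                budget_usd: float = 5.00, loop_threshold: float = 0.95) -> str:
    """step(i) runs one agent turn and returns (output_text, cost_in_usd)."""
    spent = 0.0
    outputs: list[str] = []
    for i in range(max_steps):  # step limit: no while(true)
        text, cost = step(i)
        spent += cost
        if "FINAL_ANSWER" in text:
            return text
        if spent >= budget_usd:  # budget circuit breaker
            raise CircuitBreakerTripped(f"spent ${spent:.2f} without FINAL_ANSWER")
        # Entropy check: near-duplicate of the output from two turns ago means a loop.
        if len(outputs) >= 2 and cosine(embed(text), embed(outputs[-2])) >= loop_threshold:
            raise CircuitBreakerTripped(f"looping at step {i}")
        outputs.append(text)
    raise CircuitBreakerTripped(f"hit max_steps={max_steps}")


turns = ["plan the task", "search the web", "read results", "FINAL_ANSWER: done"]
print(run_bounded(lambda i: (turns[i], 0.01)))
```

Comparing against the output from two turns ago (rather than the last one) is what catches the A/B alternation typical of two agents bouncing a task back and forth.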
