The term agentic AI is everywhere. Chatbots are being renamed agents. Workflow tools are being marketed as autonomous systems. Even simple prompt chains are now described as “multi-agent architectures.”

But most of these systems are not agentic.

They are well-packaged language models executing instructions. True agentic systems are fundamentally different, not because they are smarter, but because they behave differently.

Understanding this distinction matters, because agentic systems introduce new technical, operational, and governance risks that traditional AI systems do not.

The Core Confusion Around Agentic AI

An AI system does not become agentic simply because it:

- Uses a large language model

- Calls tools or APIs

- Runs across multiple steps

- Includes a planner or reasoning prompt

These are implementation details. They enable capability, but they do not create agency.

Agency is not about how many steps a system runs. It is about who decides what happens next.

A Practical Definition of an Agentic System

An AI system is agentic when it can:

Independently decide its next action based on goals and system state, without requiring explicit human instruction at every step.

This shift from execution to decision-making is what separates agents from traditional AI systems.
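A minimal Python sketch of that shift, under stated assumptions: the actions `fetch` and `summarize`, the dictionary-based state, and the `choose_next` policy are all hypothetical names invented for illustration. The rule-based `choose_next` is only a placeholder for whatever component makes the decision (in practice, possibly a model); the point is where control of the next step lives, not how the choice is computed.

```python
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, object]

# Hypothetical actions: each takes the state and returns an updated copy.
def fetch(state: State) -> State:
    return {**state, "fetched": True}

def summarize(state: State) -> State:
    return {**state, "summary": "done" if state.get("fetched") else None}

ACTIONS: Dict[str, Callable[[State], State]] = {
    "fetch": fetch,
    "summarize": summarize,
}

def run_scripted(state: State) -> State:
    """Execution: the caller fixed the order in advance."""
    for name in ("fetch", "summarize"):
        state = ACTIONS[name](state)
    return state

def choose_next(state: State) -> Optional[str]:
    """Placeholder policy: pick the next action from the goal and the state."""
    if state.get("summary"):
        return None  # goal reached, nothing left to do
    if not state.get("fetched"):
        return "fetch"
    return "summarize"

def run_agentic(state: State, max_steps: int = 10) -> Tuple[State, List[str]]:
    """Decision-making: the system picks each step, within a step limit."""
    taken: List[str] = []
    for _ in range(max_steps):
        name = choose_next(state)
        if name is None:
            break
        state = ACTIONS[name](state)
        taken.append(name)
    return state, taken
```

Calling `run_agentic({"fetched": True})` skips the fetch step because the state says it is already done, while `run_scripted` runs both steps regardless of what the state contains.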

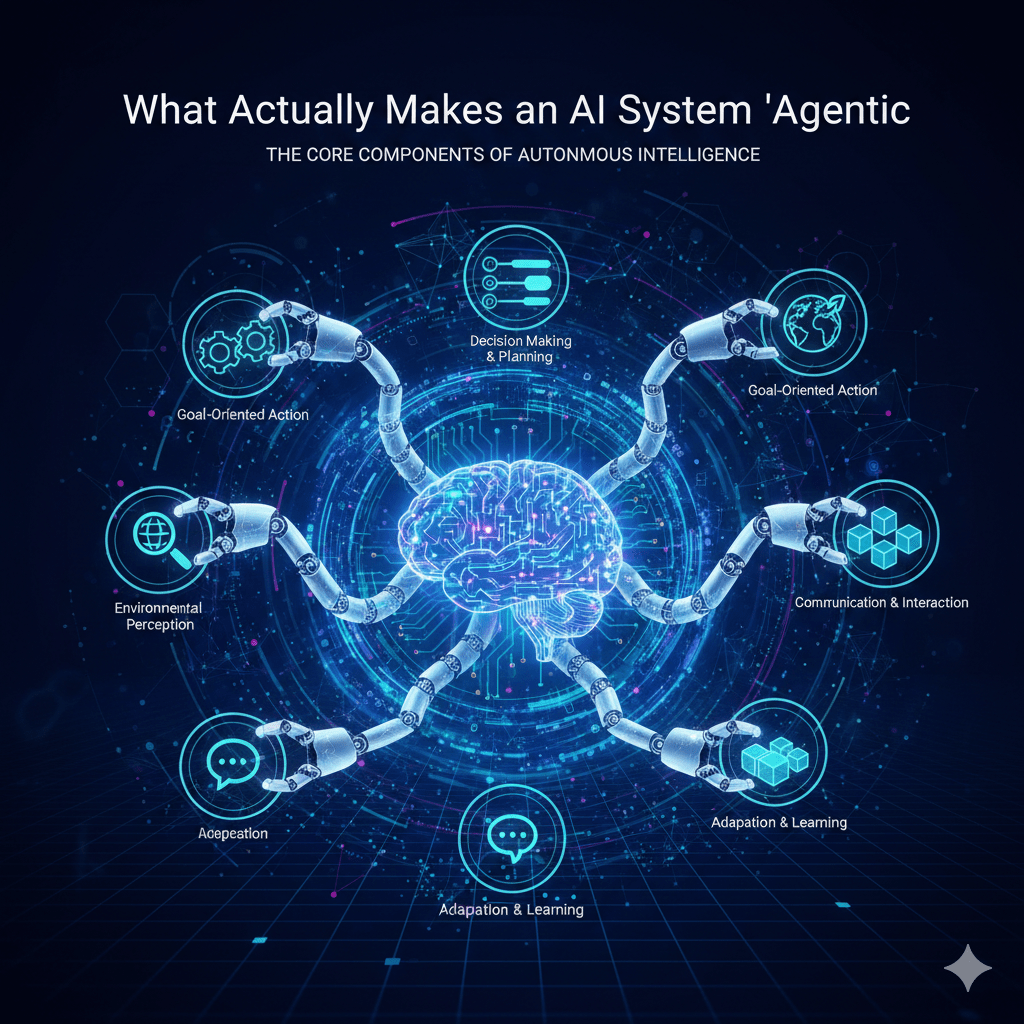

The Five Characteristics of a Truly Agentic System

1. Goal-Driven Behavior

Agentic systems operate toward a goal, not a single task.

A non-agent:

- Executes exactly what it is told

An agent:

- Evaluates progress toward an objective

- Adjusts actions when conditions change

- Decides whether to continue, pause, retry, or escalate

Without goal evaluation, there is no agency, only scripted execution.
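The continue, pause, retry, or escalate choice can be sketched as a small evaluation step. Everything here is an assumption made for illustration: the `Progress` fields, the `max_retries` limit, and the extra `DONE` outcome (added so the loop has a terminal state). The rules are fixed only to keep the sketch runnable; in a real agent this evaluation might be produced by a model or a learned policy.

```python
from dataclasses import dataclass
from enum import Enum

class Decision(Enum):
    CONTINUE = "continue"
    PAUSE = "pause"
    RETRY = "retry"
    ESCALATE = "escalate"
    DONE = "done"  # assumption: not in the list above, added as a stop state

@dataclass
class Progress:
    goal_met: bool
    last_step_failed: bool
    retries_used: int
    blocked_on_external: bool  # e.g. waiting on an approval or a slow job

def decide(p: Progress, max_retries: int = 2) -> Decision:
    """Evaluate progress toward the goal and choose what happens next."""
    if p.goal_met:
        return Decision.DONE
    if p.blocked_on_external:
        return Decision.PAUSE
    if p.last_step_failed:
        # Retry while the budget lasts, then hand the problem to a human.
        if p.retries_used < max_retries:
            return Decision.RETRY
        return Decision.ESCALATE
    return Decision.CONTINUE
```

The input to `decide` is progress toward the objective, not the next instruction from a user, which is the difference the section describes.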

2. Persistent State Over Time

Agentic systems are stateful.

They maintain awareness of:

- Past actions

- Current context

- Outstanding work

- Environmental changes

Stateless LLM calls may appear intelligent, but on their own they cannot support agency. Agents reason across time, not just across tokens.
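One way to picture persistent state is a record that outlives any single model call. The sketch below is an assumption, not a prescribed design: the `AgentState` class, its field names, and the JSON round trip are hypothetical and simply mirror the four items listed above.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List

@dataclass
class AgentState:
    goal: str
    past_actions: List[Dict[str, str]] = field(default_factory=list)
    context: Dict[str, str] = field(default_factory=dict)
    outstanding: List[str] = field(default_factory=list)
    observed_changes: List[str] = field(default_factory=list)

    def record(self, action: str, outcome: str) -> None:
        """Log a completed action and clear it from the outstanding work."""
        self.past_actions.append({"action": action, "outcome": outcome})
        if action in self.outstanding:
            self.outstanding.remove(action)

def save(state: AgentState) -> str:
    """Serialize the state so it survives between calls or restarts."""
    return json.dumps(asdict(state))

def load(blob: str) -> AgentState:
    """Restore the state before the next decision is made."""
    return AgentState(**json.loads(blob))
```

A stateless call would start from an empty record every time. Here, a later step can load the saved state and see what was already tried and what work remains.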

3. Autonomous Decision-Making

An agent must be able to choose between multiple possible actions:

- Which tool to use

- Whether to request clarification

- When to escalate to a human

- Whether to retry or stop

If every decision path is hardcoded, the system is automation, not an agent.

4. Ability to Act on the Environment

Agentic systems do more than generate text.

They:

- Update databases

- Trigger workflows

- Modify records

- Execute transactions

- Influence downstream systems

Crucially, these actions change the environment, and the agent must then reason about the consequences of those changes.

5. Feedback and Self-Correction Loops

After acting, an agent evaluates outcomes:

- Was the action successful?

- Did it move closer to the goal?

- Should the strategy change?

Without feedback loops, systems appear autonomous but behave blindly. Feedback is what allows agentic systems to operate safely and adaptively in real-world environments.
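The three questions above map onto an act, observe, adjust loop. The following sketch assumes a toy restocking scenario: the `env` dictionary, the two ordering functions, and their costs are invented for illustration and stand in for real side effects such as database writes or transactions.

```python
from typing import Callable, Dict, List

Env = Dict[str, int]

def order_bulk(env: Env) -> None:
    # Hypothetical action: silently does nothing when the budget is too small.
    if env["budget"] >= 50:
        env["budget"] -= 50
        env["stock"] += 10

def order_small(env: Env) -> None:
    if env["budget"] >= 5:
        env["budget"] -= 5
        env["stock"] += 1

def restock(env: Env, target: int, max_steps: int = 20) -> List[str]:
    strategies: List[Callable[[Env], None]] = [order_bulk, order_small]
    current = 0
    log: List[str] = []
    for _ in range(max_steps):
        if env["stock"] >= target:           # did we reach the goal?
            log.append("goal_met")
            return log
        before = env["stock"]
        strategies[current](env)             # act on the environment
        progressed = env["stock"] > before   # observe the outcome
        name = strategies[current].__name__
        log.append(f"{name}:{'ok' if progressed else 'no_progress'}")
        if not progressed:                   # should the strategy change?
            current += 1
            if current == len(strategies):
                log.append("escalate")       # out of options, ask a human
                return log
    log.append("step_limit")
    return log
```

Without the `progressed` check, the loop would keep calling `order_bulk` after the budget ran out and never notice that nothing was changing, which is the blind behavior described above.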

What Is Commonly Mistaken for Agentic AI

Many systems are labeled agentic but lack true agency:

- Prompt chains

- Predefined workflows

- Retrieval-augmented generation (RAG) pipelines

- Copilots that wait for user input

- Multi-step automations with fixed decision logic

These systems can support agents, but they are not agents themselves.

Why This Distinction Matters

Mislabeling systems as agentic creates real risks:

- Organizations underestimate autonomy and oversight needs

- Accountability becomes unclear when decisions are made independently

- Failures are harder to trace and audit

- Governance is added too late, after incidents occur

Agentic systems don’t just scale intelligence; they scale decision-making.

That changes how risk, responsibility, and control must be designed.

The Right Question to Ask

Instead of asking:

“Is this system an agent?”

Ask:

“What decisions does this system make without human approval?”

The more independent decisions it makes, the more agentic it is, and the more carefully it must be governed.
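That question can be answered from a decision log, if one exists. The sketch below assumes each decision is recorded with a flag saying whether a human approved it; the `DecisionRecord` type, the `autonomy_report` function, and the example decision names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Union

@dataclass(frozen=True)
class DecisionRecord:
    name: str             # what was decided, e.g. "retry_payment"
    human_approved: bool  # True if a person signed off before it ran

def autonomy_report(records: List[DecisionRecord]) -> Dict[str, Union[List[str], float]]:
    """List the decision types made without approval and their share of the log."""
    unapproved = [r for r in records if not r.human_approved]
    share = len(unapproved) / len(records) if records else 0.0
    return {
        "autonomous_decisions": sorted({r.name for r in unapproved}),
        "autonomous_share": share,
    }

records = [
    DecisionRecord("retry_payment", False),
    DecisionRecord("issue_refund", True),
    DecisionRecord("retry_payment", False),
    DecisionRecord("close_ticket", False),
]
report = autonomy_report(records)
```

The list of unapproved decision types is a rough inventory of what needs governance. The share is only a crude indicator, since a single high-impact decision can matter more than many low-impact ones.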

Agentic AI is not defined by models, prompts, or tools. It is defined by autonomy within boundaries.

A system is agentic if it can:

- Track goals

- Maintain state

- Choose actions

- Act on the world

- Learn from outcomes

If it cannot, it is a tool, no matter how sophisticated it looks. And in modern AI systems, that distinction is not semantic. It is foundational.
