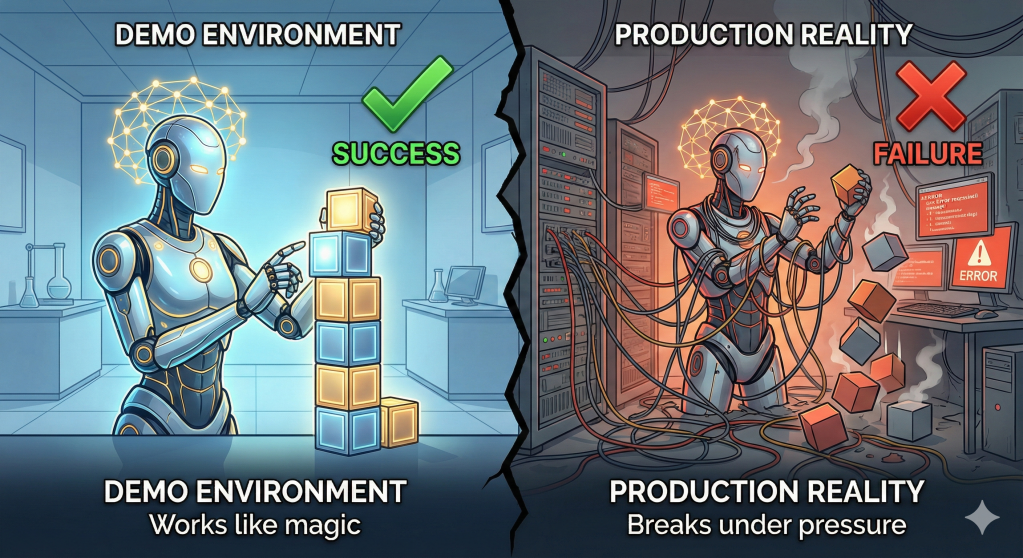

There is a “Demo Trap” in Agentic AI.

In a Jupyter notebook or a curated Twitter demo, agents look like magic. They research topics, write code, and book flights. But when these same architectures are lifted into production environments, they often crumble. The “works on my machine” phenomenon has never been more prevalent than in the world of autonomous agents.

Moving from a probabilistic chat interface to an agent that takes deterministic actions exposes a new class of failure modes that standard LLMOps pipelines aren’t built to handle.

Here is why most agents fail when they hit the real world, and the engineering shifts required to fix them.

The Three Failure Modes

1. The Stochastic Loop of Death

The most common failure in production agents is the infinite retry loop.

- The Scenario: An agent tries to call a tool (e.g., `query_database`). The tool returns an error (e.g., “Invalid Syntax”). The agent apologizes, tries to “fix” it, and sends the exact same malformed query again.

- The Reality: LLMs are stubborn. Once they lock onto a reasoning path, they struggle to “backtrack” without explicit state management. In production, this looks like a single user request burning through $50 of API credits in 3 minutes because the agent is stuck in a Thought -> Action -> Error -> Thought loop.
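One way to make this loop visible in code is to fingerprint every failed tool call and refuse an identical retry. The sketch below is a minimal, framework-free illustration; `LoopGuard` and `RepeatedCallError` are hypothetical names, and blocking after a single identical failure is an assumed threshold you would tune.

```python
import hashlib
import json


class RepeatedCallError(RuntimeError):
    """Raised when the agent re-sends a tool call that already failed."""


class LoopGuard:
    """Tracks failed tool calls by fingerprint (hypothetical helper, not a library API)."""

    def __init__(self, max_identical_failures: int = 1) -> None:
        self.max_identical_failures = max_identical_failures
        self.failures: dict[str, int] = {}

    @staticmethod
    def fingerprint(tool: str, args: dict) -> str:
        # sort_keys makes {"a": 1, "b": 2} and {"b": 2, "a": 1} hash identically
        payload = json.dumps({"tool": tool, "args": args}, sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def check(self, tool: str, args: dict) -> None:
        # Call before executing the tool; raises if this exact call already failed too often
        fp = self.fingerprint(tool, args)
        count = self.failures.get(fp, 0)
        if count >= self.max_identical_failures:
            raise RepeatedCallError(
                f"{tool} already failed {count} time(s) with these exact arguments"
            )

    def record_failure(self, tool: str, args: dict) -> None:
        # Call after the tool returns an error
        fp = self.fingerprint(tool, args)
        self.failures[fp] = self.failures.get(fp, 0) + 1
```

When `check` raises, the surrounding code (not the model) decides what happens next, for example escalating instead of spending another round trip on the same malformed query.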

2. Hallucinated Parameters & Schema Drift

Agents break because they don’t respect rigid API contracts.

- The Scenario: Your API requires a date in `YYYY-MM-DD` format. The agent decides, just this once, to send `January 1st, 2026`.

- The Reality: While function calling (tool use) capabilities have improved in models like GPT-4o, they are not infallible. Under high load or with complex context windows, agents often “forget” the strict constraints of the tools they are wielding, leading to downstream system crashes that look like application errors but are actually cognitive failures.

3. Context Pollution

As an agent works through a multi-step task, the context window fills up with “thoughts,” intermediate results, and tool outputs.

- The Scenario: By step 5 of a 10-step process, the prompt is so cluttered with the noise of previous steps that the model “forgets” the original user instruction.

- The Reality: “Attention” is not infinite. Performance degrades non-linearly as the context gets noisy. An agent that works perfectly for a 2-step task will often hallucinate wildly on step 8 simply because the signal-to-noise ratio in its memory has collapsed.
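A common mitigation is to pin the system prompt and the original user instruction, and keep only the most recent steps. This sketch assumes messages use the familiar `{"role": ..., "content": ...}` chat shape; `prune_context` and its `keep_last` default are illustrative choices, not a library API.

```python
def prune_context(messages: list[dict], keep_last: int = 4) -> list[dict]:
    """Keep system messages and the first user instruction, plus the last `keep_last` others."""
    pinned: list[dict] = []
    history: list[dict] = []
    seen_first_user = False
    for msg in messages:
        is_first_user = msg["role"] == "user" and not seen_first_user
        if msg["role"] == "system" or is_first_user:
            # The original instruction is never dropped, however long the run gets
            pinned.append(msg)
            seen_first_user = seen_first_user or is_first_user
        else:
            history.append(msg)

    dropped = max(len(history) - keep_last, 0)
    if dropped == 0:
        return pinned + history
    # Leave a marker so the model knows earlier steps existed
    marker = {"role": "system", "content": f"[{dropped} earlier step(s) omitted]"}
    return pinned + [marker] + history[dropped:]
```

Many chat APIs require a tool result to stay next to the assistant message that requested it, so a production version would drop whole steps rather than individual messages, and might replace them with a model-written summary instead of a placeholder.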

The Fix: From “Prompt Engineering” to “Flow Engineering”

We cannot prompt-engineer our way out of reliability issues. The industry is shifting toward Flow Engineering—architecting the system around the model, rather than just optimizing the model itself.

1. State Machines over Free-Form Loops

Stop building unconstrained while loops. The most robust agents in production today (using frameworks like LangGraph) treat agentic workflows as State Machines or Graphs.

- The Fix: Define explicit nodes and edges. If an agent fails a tool call twice, force a transition to a “Human Handoff” state or a “Fail Gracefully” state. Do not let the LLM decide when to give up; hard-code the exit criteria.
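The idea of a hard-coded exit can be shown without any framework. The sketch below is a plain-Python state machine for a single tool-calling node; it does not reproduce LangGraph's API, and `State`, `run_tool_step`, and the two-failure limit are assumptions chosen to mirror the rule above.

```python
from enum import Enum, auto
from typing import Callable


class State(Enum):
    CALL_TOOL = auto()
    DONE = auto()
    HUMAN_HANDOFF = auto()


# Hard-coded exit criterion: the model never decides when to give up.
MAX_TOOL_FAILURES = 2


def run_tool_step(
    attempt: Callable[[], bool], max_failures: int = MAX_TOOL_FAILURES
) -> tuple[State, int]:
    """Run one tool-calling node and return (final_state, failure_count).

    `attempt` stands in for "ask the LLM for arguments, then call the tool";
    it returns True on success and False on a tool error.
    """
    state = State.CALL_TOOL
    failures = 0
    while state is State.CALL_TOOL:
        if attempt():
            state = State.DONE
        else:
            failures += 1
            if failures >= max_failures:
                state = State.HUMAN_HANDOFF  # explicit edge out of the loop
    return state, failures
```

Feeding it an attempt that always fails ends in `HUMAN_HANDOFF` after two failures instead of looping; the transition lives in code, where it can be tested.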

2. Deterministic Guardrails

Don’t trust the LLM to format your JSON.

- The Fix: Wrap every tool call in a validation layer (using libraries like Pydantic). If the agent generates parameters, validate them before they hit your API. If validation fails, return a structured error message to the agent specifically guiding it on why it failed, rather than a generic HTTP 500. This turns the error into a learning signal for the model within the session.
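Here is a minimal validation layer for the date example from failure mode 2. In practice you would likely reach for Pydantic, where a model with a `date` field rejects the same input; this version sticks to the standard library so it runs without dependencies. `ReportArgs`, `validate_report_args`, and the error payload's keys are hypothetical.

```python
from __future__ import annotations

import json
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ReportArgs:
    """Hypothetical schema for a tool whose API requires an ISO date."""
    start_date: date


def _tool_error(field: str, problem: str, received: object) -> str:
    # Structured, model-readable feedback instead of a bare HTTP 500
    return json.dumps({
        "error": "invalid_arguments",
        "field": field,
        "problem": problem,
        "received": received,
    })


def validate_report_args(raw: dict) -> tuple[ReportArgs | None, str | None]:
    """Validate LLM-generated arguments before they reach the real API."""
    value = raw.get("start_date")
    if not isinstance(value, str):
        return None, _tool_error("start_date", "missing or not a string", value)
    try:
        parsed = date.fromisoformat(value)
    except ValueError:
        return None, _tool_error(
            "start_date", "must use YYYY-MM-DD format, e.g. 2026-01-01", value
        )
    return ReportArgs(start_date=parsed), None
```

On failure, the JSON string goes back to the agent as the tool result, so its next attempt is guided by a specific field and format rather than a generic error.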

3. Observability is Not Optional

You cannot fix what you cannot trace. Standard logging (input/output) is useless for agents.

- The Fix: You need Trace-level Observability (using tools like LangSmith or Arize Phoenix). You must be able to see the internal monologue: What tool did it think about using? Why did it reject that path? Productionizing agents without deeply granular tracing is engineering malpractice.
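To show what "trace-level" means structurally, here is a tiny in-memory tracer that records nested spans for reasoning steps and tool calls, including errors and timings. It is a hypothetical sketch and does not reproduce the LangSmith or Arize Phoenix APIs; those tools collect and visualize the same kind of parent/child span tree.

```python
from __future__ import annotations

import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field


@dataclass
class Span:
    name: str
    kind: str  # e.g. "llm", "tool", "decision"
    parent_id: str | None
    attributes: dict = field(default_factory=dict)
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    error: str | None = None
    duration_s: float = 0.0


class Tracer:
    """Minimal in-memory tracer (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self.spans: list[Span] = []  # appended in completion order
        self._stack: list[str] = []  # ids of currently open spans

    @contextmanager
    def span(self, name: str, kind: str, **attributes):
        parent = self._stack[-1] if self._stack else None
        s = Span(name=name, kind=kind, parent_id=parent, attributes=dict(attributes))
        self._stack.append(s.span_id)
        start = time.perf_counter()
        try:
            yield s
        except Exception as exc:
            s.error = repr(exc)  # keep the failure on the span, then re-raise
            raise
        finally:
            s.duration_s = time.perf_counter() - start
            self._stack.pop()
            self.spans.append(s)
```

Recording the model's stated reason as a span attribute (for example `thought="use query_database, not web_search"`) is what lets you answer "why did it reject that path?" after the fact.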

The Verdict

The difference between a toy agent and a production agent is constraint.

The most successful “agents” in 2026 often look less like open-ended thinkers and more like flexible pipelines. They have freedom to reason within a step, but the path between steps is rigidly engineered. To build agents that survive production, we have to stop treating them like magic wands and start treating them like non-deterministic software components that require exception handling, timeouts, and strict typing.
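As one concrete reading of "exception handling, timeouts, and strict typing", this standard-library sketch wraps any tool call in a deadline and turns every failure into a structured result the workflow can route on. `call_tool_safely` is a hypothetical helper; Python threads cannot be forcibly stopped, so a timed-out call keeps running in the background, and a process-based or async design may suit long-running tools better.

```python
import concurrent.futures
from typing import Any, Callable

# One shared pool; a real service would size this deliberately.
_TOOL_POOL = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def call_tool_safely(
    fn: Callable[..., Any], *args: Any, timeout_s: float = 5.0, **kwargs: Any
) -> dict:
    """Run a tool with a deadline; never let it crash or hang the agent loop."""
    future = _TOOL_POOL.submit(fn, *args, **kwargs)
    try:
        return {"ok": True, "result": future.result(timeout=timeout_s)}
    except concurrent.futures.TimeoutError:
        # The worker thread is not killed; it finishes in the background.
        return {"ok": False, "error": f"timed out after {timeout_s}s"}
    except Exception as exc:
        # The tool raised: report it as data instead of propagating it
        return {"ok": False, "error": f"{type(exc).__name__}: {exc}"}
```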
