We are currently witnessing a massive shift in AI architecture: moving from Chatbots (which answer questions) to Agents (which execute tasks).

On the surface, this sounds like the Holy Grail of efficiency. Why hire a human analyst when an autonomous agent can browse the web, scrape data, analyze spreadsheets, and write a report 24/7?

But as we move from “Pilot” to “Production,” finance leaders are discovering a hidden landmine: The Unit Economics of Agency.

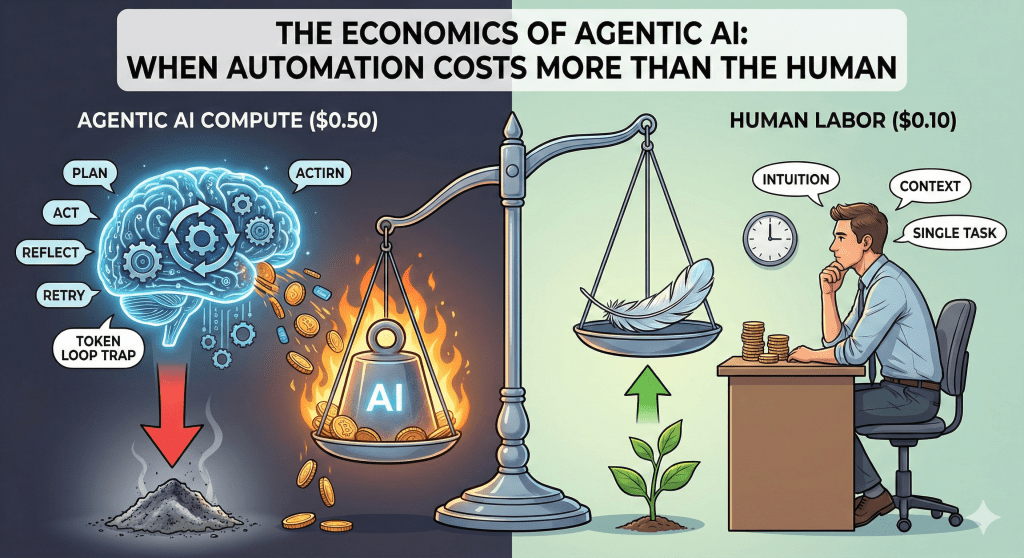

Generative AI was cheap when it was one prompt in, one answer out. Agentic AI is different. It is recursive. It thinks, it plans, it corrects itself. And every moment of “thinking” costs money.

Here is the uncomfortable truth: Right now, many organizations are spending $0.50 in compute to automate a task that costs a human $0.10 of time.

The Hidden Cost of “Thinking”

To understand the economics, you have to understand the architecture.

- Standard GenAI (RAG): User asks a question -> System retrieves data -> LLM answers.

- Agentic AI: User assigns a goal -> Agent creates a plan -> Agent executes Step 1 -> Agent reflects (“Did I do that right?”) -> Agent retries -> Agent executes Step 2 -> Agent hallucinates -> Agent self-corrects -> Agent finalizes.

We call this the “Token Loop.”

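Agents don’t just generate text; they generate reasoning traces. To ensure reliability, developers build in “reflection steps” where the model critiques its own work. While this reduces errors, it multiplies the compute bill: every reflection or retry is another model call, and each call typically re-reads the growing history of everything the agent has done so far.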
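The “Token Loop” can be made concrete with a toy token count. This is a minimal sketch, not a measurement: the function names and every token figure are hypothetical, and it assumes each agent step is a separate model call that re-reads the full history of earlier steps (a common pattern, though frameworks differ in how much context they carry forward).

```python
# Toy token accounting: one RAG call vs. a multi-step agent loop.
# All numbers are illustrative assumptions, not benchmarks.

def rag_tokens(prompt: int, retrieved: int, answer: int) -> int:
    """One call: read the prompt plus retrieved context once, write one answer."""
    return prompt + retrieved + answer


def agent_loop_tokens(prompt: int, step_output: int, steps: int) -> int:
    """Each step (plan, act, reflect, retry, ...) is a separate model call.

    Assumption: every call re-reads the whole history so far, then appends
    its own output to that history for the next call.
    """
    total = 0
    context = prompt
    for _ in range(steps):
        total += context + step_output  # tokens read + tokens written this call
        context += step_output          # history grows for the next call
    return total


if __name__ == "__main__":
    print("RAG, one call:  ", rag_tokens(1_000, 2_000, 500))
    print("Agent, 8 steps: ", agent_loop_tokens(1_000, 500, 8))
    print("Agent, 16 steps:", agent_loop_tokens(1_000, 500, 16))
```

With these made-up inputs, the single RAG call uses 3,500 tokens, eight agent steps use 26,000, and doubling to sixteen steps more than triples the total to 84,000. Under this assumption, token spend grows roughly with the square of the number of steps.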

The New Equation: Compute Cost vs. Human Cognitive Cost

As a CFO or Data Leader, you need a new framework for approving AI use cases. You can no longer just ask “Can AI do this?” You must ask “Does the margin exist to let AI do this?”

The Agentic ROI Formula:

$$\text{Net Value} = (\text{Human Cost Saved}) - (\text{Agent Compute Cost} + \text{Error Correction Cost})$$
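The formula stays honest only if each term is computed from units the business actually tracks: minutes of staff time and tokens consumed. A minimal sketch; the function name and parameters are hypothetical, and the $16-per-million-token rate in the usage line is simply the blended rate implied by the meeting-scheduler example below ($0.80 for 50k tokens), not a published price:

```python
def net_value(human_minutes: float, wage_per_minute: float,
              agent_tokens: int, usd_per_million_tokens: float,
              error_correction_cost: float = 0.0) -> float:
    """Net Value = Human Cost Saved - (Agent Compute Cost + Error Correction Cost)."""
    human_cost_saved = human_minutes * wage_per_minute
    agent_compute_cost = agent_tokens * usd_per_million_tokens / 1_000_000
    return human_cost_saved - (agent_compute_cost + error_correction_cost)


# Meeting scheduler: 2 minutes at $0.50/min vs. 50k tokens at an implied $16/M.
print(f"${net_value(2, 0.50, 50_000, 16.0):.2f}")  # $0.20
```

Keeping Error Correction Cost as an explicit argument, rather than folding it into the compute figure, makes it harder to quietly leave out.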

The Trap:

Let’s say you build an agent to schedule meetings.

- Human Cost: It takes a human 2 minutes to email three people. At a $60k salary (roughly $0.50 per working minute), that task costs about $1.00.

- Agent Cost: The agent enters a loop. It checks calendars, drafts emails, reads replies, spots a conflict, re-plans, and updates the invite. It runs on a premium model (like GPT-4o or the o1 reasoning model) and burns 50k tokens. The cost might be $0.80.

The Verdict: You saved 20 cents. Is the technical debt, maintenance, and risk of the agent hallucinating the time worth 20 cents? No.
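The meeting numbers can be checked against the ROI formula. The per-minute wage assumes a standard 2,080-hour working year (40 hours a week for 52 weeks), and the Error Correction Cost is set to zero, as in the example:

$$\frac{\$60{,}000}{2{,}080\ \text{h} \times 60\ \text{min/h}} \approx \$0.48/\text{min} \approx \$0.50/\text{min}$$

$$\text{Net Value} = (2\ \text{min} \times \$0.50/\text{min}) - (\$0.80 + \$0) = \$1.00 - \$0.80 = \$0.20$$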

The Opportunity:

Now, let’s look at Supply Chain Optimization.

- Human Cost: An analyst spends 4 hours cross-referencing shipping manifest PDFs against SAP data. Cost: $120.

- Agent Cost: An agent runs for 20 minutes, using massive “Chain of Thought” reasoning. It costs $15 in tokens.

The Verdict: You saved $105 per transaction. Build this immediately.
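Run through the same formula, with the same roughly $0.50-per-minute wage and again no Error Correction Cost, the supply chain case looks like this:

$$\text{Human Cost Saved} = 4\ \text{h} \times 60\ \text{min/h} \times \$0.50/\text{min} = \$120$$

$$\text{Net Value} = \$120 - (\$15 + \$0) = \$105$$

Compute is only 12.5% of the human cost here ($15 / $120), versus 80% in the meeting example ($0.80 / $1.00).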

3 Rules for Financially Viable Agents

If you are reviewing GenAI budgets for 2025, apply these three filters:

1. Ban “Low-Cognitive” Agents

Do not use expensive reasoning models for tasks that are basically “copy-paste.” If a task requires little reasoning (e.g., scheduling, basic data entry), use rigid code (RPA) or cheap “Flash” models. Do not burn GPU cycles on administrative trivia.
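Finding a meeting time is a good example of the low-cognitive tier: once the calendars have been fetched, picking a slot is plain interval arithmetic with no model call at all. A minimal sketch, assuming busy times have already been pulled into a dict of (start, end) pairs; the data shape and function name are hypothetical and not tied to any calendar API:

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical data shape: each person's busy blocks as (start, end) pairs.
Interval = tuple[datetime, datetime]


def first_common_slot(busy_by_person: dict[str, list[Interval]],
                      day_start: datetime, day_end: datetime,
                      duration: timedelta) -> Optional[datetime]:
    """Return the earliest start time when everyone is free for `duration`."""
    # Pool everyone's busy blocks and walk them in chronological order.
    busy = sorted(block for blocks in busy_by_person.values() for block in blocks)
    cursor = day_start  # earliest moment not yet ruled out
    for start, end in busy:
        # Gap between the cursor and the next busy block, clipped to the day.
        if min(start, day_end) - cursor >= duration:
            return cursor
        cursor = max(cursor, end)
    # Whatever remains after the last busy block.
    if day_end - cursor >= duration:
        return cursor
    return None


day = datetime(2025, 1, 6)
busy = {
    "alice": [(day.replace(hour=9), day.replace(hour=10))],
    "bob": [(day.replace(hour=9, minute=30), day.replace(hour=11))],
    "carol": [(day.replace(hour=12), day.replace(hour=13))],
}
slot = first_common_slot(busy, day.replace(hour=9), day.replace(hour=17),
                         timedelta(hours=1))
print(slot)  # 2025-01-06 11:00:00
```

The logic is deterministic and costs nothing per run; a cheap model might still be worth it for drafting the invite text, but not for the scheduling itself.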

2. Demand “Model Routing”

Your architecture should not default to the smartest model. It should default to the cheapest model that can do the job. A well-architected agentic system uses a “Router” to send easy tasks to cheap models (GPT-4o-mini, Haiku) and only escalates to expensive “Thinker” models (o1, Claude 3.5 Sonnet) when complex reasoning is required.
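At its simplest, a router is a lookup that picks the cheapest tier able to handle a task. A minimal sketch, assuming some upstream step (rules or a small classifier) has already scored each task’s complexity on a 1-5 scale; the tier names, scores, and prices are placeholders, not real model IDs or published prices:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                      # placeholder label, not a real model ID
    max_complexity: int            # hardest task (1-5 scale) this tier is trusted with
    usd_per_million_tokens: float  # hypothetical blended price


# Illustrative tiers only; real names and prices vary by vendor and over time.
TIERS = [
    ModelTier("cheap-fast", max_complexity=2, usd_per_million_tokens=0.5),
    ModelTier("mid", max_complexity=3, usd_per_million_tokens=5.0),
    ModelTier("thinker", max_complexity=5, usd_per_million_tokens=40.0),
]


def route(complexity: int, tiers: list[ModelTier] = TIERS) -> ModelTier:
    """Default to the cheapest tier that can handle the task; escalate only when needed."""
    eligible = [t for t in tiers if t.max_complexity >= complexity]
    if not eligible:
        raise ValueError(f"no tier is trusted with complexity {complexity}")
    return min(eligible, key=lambda t: t.usd_per_million_tokens)


def estimated_cost(tier: ModelTier, tokens: int) -> float:
    """Dollar cost of a run at the tier's blended per-million-token price."""
    return tier.usd_per_million_tokens * tokens / 1_000_000


for task, score in [("extract invoice date", 1), ("reconcile manifest vs SAP", 4)]:
    tier = route(score)
    print(f"{task}: {tier.name}, ~${estimated_cost(tier, 50_000):.2f} per 50k tokens")
```

Escalation then amounts to re-routing at a higher complexity score when a cheap tier’s output fails a check, and keeping the tier table in one place makes re-pricing easy when vendors change rates.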

3. Factor in the “Error Tax”

Agents fail. A lot. They get stuck in loops. They hallucinate. When calculating ROI, assume a 10-15% failure rate where a human has to step in and fix the mess. If the margin is already thin, the “Error Tax” will wipe it out.
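The “Error Tax” is the Error Correction Cost term in the ROI formula. A minimal sketch, assuming it can be modeled as an expected value (failure rate times the cost of one human cleanup) and, for the examples, that a cleanup costs about as much as doing the task by hand; real cleanups may well cost more. The function names are hypothetical:

```python
def expected_net_value(human_cost: float, compute_cost: float,
                       failure_rate: float, fix_cost: float) -> float:
    """Net Value = Human Cost Saved - (Agent Compute Cost + Error Correction Cost),
    with Error Correction Cost modeled as failure_rate * fix_cost."""
    error_correction_cost = failure_rate * fix_cost
    return human_cost - (compute_cost + error_correction_cost)


def break_even_failure_rate(human_cost: float, compute_cost: float,
                            fix_cost: float) -> float:
    """Failure rate at which the expected net value drops to zero."""
    return (human_cost - compute_cost) / fix_cost


# Meeting scheduler: $1.00 human cost, $0.80 compute, 15% failures, $1.00 per fix.
meeting = expected_net_value(1.00, 0.80, 0.15, 1.00)
# Supply chain: $120 human cost, $15 compute, 15% failures, $120 per fix.
supply_chain = expected_net_value(120.0, 15.0, 0.15, 120.0)

print(f"meeting: ${meeting:.2f}, break-even failure rate "
      f"{break_even_failure_rate(1.00, 0.80, 1.00):.0%}")
print(f"supply chain: ${supply_chain:.2f}, break-even failure rate "
      f"{break_even_failure_rate(120.0, 15.0, 120.0):.1%}")
```

Under these assumptions the $0.20 meeting margin shrinks to about $0.05 and vanishes entirely once failures pass 20%, while the supply chain agent still clears about $87 and could tolerate failure rates up to 87.5%.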

Agentic AI is the future, but it is not free labor. It is digital labor, and like all labor, it has a wage.

The winners in the Agent Economy won’t just be the companies with the smartest bots. They will be the companies that treat compute as a scarce resource, allocating it only where it generates outsized returns.

Strategic Question for the Board:

Are we automating tasks because they are high-value, or just because they are automatable?
