As enterprises accelerate their adoption of generative AI, one truth is becoming increasingly clear: no single model can deliver optimal performance across all tasks. The age of relying on one “foundation model” for everything is ending. What’s emerging instead is a new architectural pattern, LLM Mesh, powered by Model Routing Agents that intelligently select the best model for each job.

This marks a profound shift from monolithic AI to specialized, orchestration-driven AI ecosystems.

1. Why One Model Is No Longer Enough

Different models excel at different capabilities:

- Deep reasoning → Gemini / Claude

- Speed & cost efficiency → Llama / Qwen

- Creative image generation → Midjourney / Stable Diffusion

- Domain-specific precision → FinBERT, LegalBERT, Med-LLM

- Agentic execution → models optimized for tools & actions

Enterprises are discovering that using one model for everything leads to high costs, lower quality, or both.

The natural evolution?

Let agents dynamically choose the right model for the right task, in real time.

2. What Are Model Routing Agents?

Model Routing Agents are autonomous controllers that analyse:

- The user request

- Task complexity

- Required reasoning depth

- Sensitivity of data

- Latency and cost constraints

- Compliance or policy requirements

…and then dispatch the task to the most suitable LLM or multi-model chain.

Think of them as:

The air-traffic controllers of enterprise AI.

They decide:

- Which model to call

- In what order

- At what temperature/context size

- With what constraints or guardrails

- When to escalate, retry, or fall back

This creates a flexible, high-performance AI capability that continuously optimises for cost, quality, and risk.
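
As a rough illustration of the decisions listed above, here is a minimal rule-based sketch. The `TaskProfile` fields, the thresholds, and the model names `reasoning-model` and `fast-model` are all hypothetical placeholders; a production router would more likely use a trained classifier or an LLM to make this call.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskProfile:
    complexity: int        # assumed scale: 1 (trivial) to 5 (hard)
    max_latency_ms: int    # latency budget for the whole call
    budget_usd: float      # spend allowed for this request


@dataclass(frozen=True)
class RoutingDecision:
    model: str
    temperature: float
    reason: str


def route(task: TaskProfile) -> RoutingDecision:
    """Pick a model and temperature from simple, hand-written rules."""
    wants_depth = task.complexity >= 4
    can_afford = task.max_latency_ms >= 2000 and task.budget_usd >= 0.05

    if wants_depth and can_afford:
        return RoutingDecision("reasoning-model", 0.2, "complex task within budget")
    if wants_depth:
        # Hard task, but the constraints rule out the expensive model.
        return RoutingDecision("fast-model", 0.2, "complex task, constraints too tight")
    return RoutingDecision("fast-model", 0.7, "routine task")
```

Returning the `reason` alongside the model keeps each decision explainable, which matters once routing choices have to be audited.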

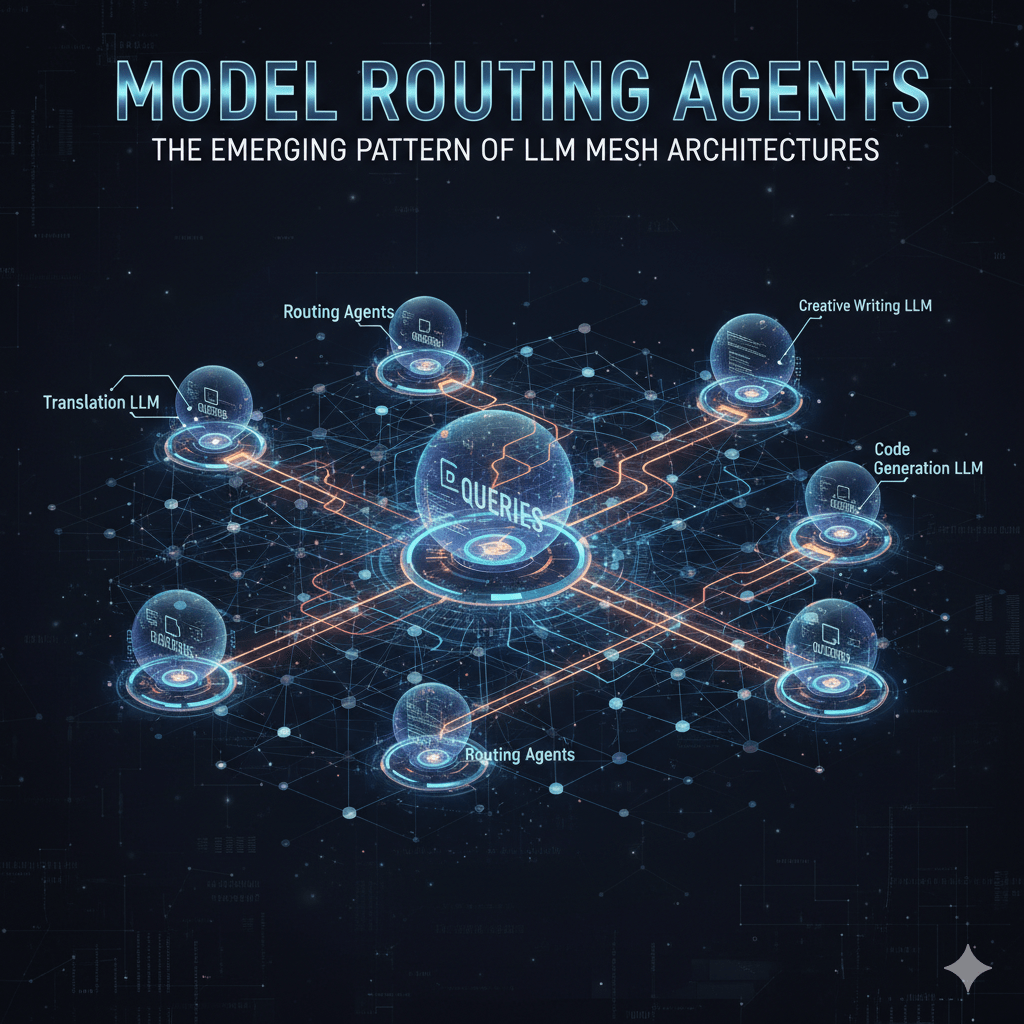

3. The LLM Mesh: A New Enterprise AI Architecture

The LLM Mesh is an interconnected ecosystem of models managed through routing agents.

Key Layers of an LLM Mesh Architecture:

- Intent Classifier: Breaks down the user input into its underlying intent(s). Example: “Draft policy + calculate impact + summarise citations.”

- Routing Agent: Selects the appropriate model(s) based on job type and constraints.

- Execution Layer: The selected models each execute their piece of the task.

- Governance Layer: Logs each routing decision and checks it against policy.

- Aggregation Layer: Combines outputs into a final coherent answer.

This is not a simple RAG pipeline; it is agentic orchestration across a heterogeneous model fleet.
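
To make the layering concrete, the sketch below wires toy versions of the layers together for the example request above. Everything here is an assumption for illustration: the models are stand-in lambdas, the intent classifier just splits on `+`, and governance is reduced to an audit list.

```python
from typing import Callable

# Hypothetical model handlers keyed by intent; real ones would call model APIs.
MODELS: dict[str, Callable[[str], str]] = {
    "draft": lambda text: f"[draft] {text}",
    "calculate": lambda text: f"[calc] {text}",
    "summarise": lambda text: f"[summary] {text}",
}


def classify_intents(request: str) -> list[tuple[str, str]]:
    """Intent classifier: split on '+' and use the first word as the intent."""
    intents = []
    for part in request.split("+"):
        part = part.strip()
        if part:
            intents.append((part.split()[0].lower(), part))
    return intents


def run_mesh(request: str) -> tuple[str, list[str]]:
    audit_log: list[str] = []
    outputs: list[str] = []
    for intent, sub_task in classify_intents(request):
        handler = MODELS.get(intent)            # routing agent
        if handler is None:
            audit_log.append(f"{intent}: no model registered, skipped")
            continue
        outputs.append(handler(sub_task))       # execution layer
        audit_log.append(f"{intent}: routed")   # governance layer (logging only)
    return "\n".join(outputs), audit_log        # aggregation layer
```

Calling `run_mesh("Draft policy + calculate impact + summarise citations")` returns the three stubbed outputs joined together, plus one audit entry per intent.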

4. Why Routing Agents Are Transformative

a. Better Quality Through Specialization

Using the best model for each task increases accuracy, quality, and depth.

b. Massive Cost Reduction

Expensive models handle only the tasks they’re best at. Routine summarization or drafting goes to lightweight models.

c. Reduced Hallucination Risk

Routing agents can implement cross-validation:

- Model A generates

- Model B checks

- Model C validates citations

This mirrors human peer review.
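
One way to picture this generate, check, validate loop is the sketch below. The three "models" are plain stub functions invented for the example, and the checks are deliberately trivial; the point is only the control flow, in which a draft is released when every reviewer accepts it.

```python
from typing import Callable, Optional

Generator = Callable[[str], str]
Reviewer = Callable[[str], bool]


def generate_with_review(
    prompt: str,
    generator: Generator,
    reviewers: list[Reviewer],
    max_attempts: int = 2,
) -> Optional[str]:
    """Return the first draft that every reviewer accepts, else None."""
    for _ in range(max_attempts):
        draft = generator(prompt)
        if all(review(draft) for review in reviewers):
            return draft
    return None


# Stub stand-ins for Model A, Model B, and Model C (hypothetical).
def model_a(prompt: str) -> str:
    return f"Answer to '{prompt}' [source: policy-doc]"


def model_b_checks(draft: str) -> bool:
    return draft.startswith("Answer")


def model_c_validates_citations(draft: str) -> bool:
    return "[source:" in draft
```

Returning `None` instead of the last rejected draft forces the caller to handle the failure explicitly, for example by escalating to a human reviewer.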

d. Built-in Redundancy and Reliability

If one model fails or produces unsafe output, agents automatically fall back to others.
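
A fallback chain of this kind might look like the following sketch. The function name `call_with_fallback`, the `is_safe` callback, and the `AllModelsFailed` exception are assumptions made for the example, not part of any specific framework.

```python
from typing import Callable, Sequence


class AllModelsFailed(RuntimeError):
    """Raised when every model in the chain errored or was rejected."""


def call_with_fallback(
    prompt: str,
    models: Sequence[tuple[str, Callable[[str], str]]],
    is_safe: Callable[[str], bool],
) -> tuple[str, str]:
    """Try models in priority order; return (model_name, output)."""
    errors: list[str] = []
    for name, model in models:
        try:
            output = model(prompt)
        except Exception as exc:  # any failure moves on to the next model
            errors.append(f"{name}: {exc}")
            continue
        if is_safe(output):
            return name, output
        errors.append(f"{name}: unsafe output")
    raise AllModelsFailed("; ".join(errors))
```

Collecting the per-model errors in the final exception means the failure path stays as traceable as the success path.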

e. Governance Alignment in Every Step

Every routing decision is logged, auditable, and policy-aware, which supports compliance with frameworks such as FEAT, ISO/IEC 42001, and the EU AI Act.

5. Real-World Use Cases in Enterprises

Banking & Finance

- Gemini → complex risk reasoning

- FinBERT → regulatory text classification

- Llama → cost-efficient documentation

- Claude → audit logs & long-context reviews

Customer Operations

Agent routes:

- Intent → Llama

- Sentiment → a dedicated sentiment-classification model

- Resolution generation → Claude

- Policy validation → domain model

Compliance / Internal Audit

Routing based on sensitivity:

- On-prem secure model for PII

- Cloud model for generic analysis

- Dual-agent validation before action
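
Sensitivity-based routing can be sketched as a check that runs before any model is called. The two regular expressions below are a deliberately crude stand-in for a real PII detector, and the `onprem` and `cloud` labels are hypothetical deployment names.

```python
import re

# Illustrative patterns only; real PII detection needs a dedicated tool.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
LONG_DIGIT_RUN = re.compile(r"\b\d{9,}\b")  # e.g. account or ID numbers


def contains_pii(text: str) -> bool:
    return bool(EMAIL.search(text) or LONG_DIGIT_RUN.search(text))


def choose_deployment(text: str) -> str:
    """Keep anything that looks sensitive on the on-prem model."""
    return "onprem" if contains_pii(text) else "cloud"
```

The check errs on the side of caution: a false positive only costs a slower on-prem call, while a false negative would send sensitive data to the cloud.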

Software Engineering

- Code refactor → DeepSeek

- Test case generation → GPT-4.1

- Threat modelling → Security-LLM

- Dependency mapping → Graph models

6. Key Design Principles for Routing Agent Architectures

A. Policy-aware routing

Every routing choice must comply with:

- Data residency

- Model sensitivity

- Business rules

- Risk tiering
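
In practice, policy-aware routing often starts as a hard filter applied before any optimisation. The sketch below assumes each model deployment carries a region and an approved risk tier; the `ModelPolicy` fields and the tier numbering are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPolicy:
    name: str
    region: str          # where the deployment processes data
    max_risk_tier: int   # highest risk tier this deployment is approved for


def eligible_models(
    models: list[ModelPolicy], required_region: str, risk_tier: int
) -> list[str]:
    """Return only the models allowed to see this request."""
    return [
        m.name
        for m in models
        if m.region == required_region and m.max_risk_tier >= risk_tier
    ]
```

Only the models that survive this filter should be passed on to cost or quality scoring, so that no trade-off can override a policy constraint.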

B. Multi-objective optimization

The agent must consider:

- Cost

- Latency

- Accuracy

- Risk

- Memory/context length
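
One simple way to combine these objectives is a weighted score with context length as a hard constraint, as in the sketch below. The weights, the 0 to 1 normalisation, and the candidate figures are assumptions chosen for the example, not measured values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    cost: float          # normalised 0..1, lower is better
    latency: float       # normalised 0..1, lower is better
    accuracy: float      # normalised 0..1, higher is better
    risk: float          # normalised 0..1, lower is better
    context_tokens: int  # maximum context window


# Hypothetical weights; in practice these would be tuned per use case.
WEIGHTS = {"cost": 0.3, "latency": 0.2, "accuracy": 0.4, "risk": 0.1}


def score(c: Candidate) -> float:
    return (
        WEIGHTS["accuracy"] * c.accuracy
        - WEIGHTS["cost"] * c.cost
        - WEIGHTS["latency"] * c.latency
        - WEIGHTS["risk"] * c.risk
    )


def pick(candidates: list[Candidate], needed_tokens: int) -> Candidate:
    """Drop models whose context is too small, then take the best score."""
    fits = [c for c in candidates if c.context_tokens >= needed_tokens]
    if not fits:
        raise ValueError("no candidate has a large enough context window")
    return max(fits, key=score)
```

With these weights a cheap model wins short requests, while a long document forces the choice toward the large-context model regardless of its cost.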

C. Tool-compatible execution

Routing agents must understand what tools a model can use:

- Browsing

- Retrieval

- Calculators

- Custom APIs
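
Tool compatibility can be modelled as a capability registry that the router queries before dispatch. The registry contents and model names below are hypothetical; the sketch only shows the subset check.

```python
# Hypothetical registry: which tools each model is able to call.
MODEL_TOOLS: dict[str, frozenset[str]] = {
    "model-a": frozenset({"retrieval", "calculator"}),
    "model-b": frozenset({"browsing", "retrieval", "custom_api"}),
    "model-c": frozenset(),
}


def models_supporting(required: set[str]) -> list[str]:
    """Return models whose tool set covers every required tool."""
    return sorted(
        name for name, tools in MODEL_TOOLS.items() if required <= tools
    )
```

For example, `models_supporting({"browsing", "custom_api"})` leaves only `model-b`, while an empty requirement set matches every model.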

D. Observability of agent decisions

Logs must answer:

- Why did it choose Model X?

- What alternatives were considered?

- Did it escalate or fall back?

- Was the final answer validated?
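
A structured log record is one straightforward way to make those four questions answerable. The field names in this sketch are assumptions, chosen to map one-to-one onto the questions above.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class RoutingLogEntry:
    request_id: str
    chosen_model: str
    reason: str                                            # why Model X?
    alternatives: list[str] = field(default_factory=list)  # what else was considered?
    escalated: bool = False                                # did it escalate?
    fell_back: bool = False                                # did it fall back?
    validated: bool = False                                # was the answer validated?


def to_log_line(entry: RoutingLogEntry) -> str:
    """Serialise one decision as a single JSON line for an audit log."""
    return json.dumps(asdict(entry), sort_keys=True)
```

Emitting one JSON object per decision keeps the log easy to query with standard tooling.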

7. The Future: The Enterprise AI “Model Mesh Fabric”

Within the next 3–5 years, enterprises are likely to operate fleets of 20–50 specialised models.

What will bind them together is the AI Routing Mesh, with:

- Autonomous routing

- Distributed agent swarms

- Real-time policy evaluation

- Knowledge graph–augmented memory

- Event-driven triggers (Kafka)

- Dynamic risk tiering

- Compliance-by-design

This is the next evolution of GenAI, and it makes enterprises resilient, compliant, and cost-efficient.

Model Routing Agents represent the next big shift in enterprise AI architecture.

Just as cloud-native apps evolved from monoliths → microservices → service mesh, enterprise AI is evolving from single models → model clusters → LLM Mesh.

This architecture is:

- More scalable

- More compliant

- More cost-effective

- More aligned to real enterprise workflows

And most importantly, it moves enterprises toward AI agents that reason, choose, and act with judgment approaching that of an expert human operator.
