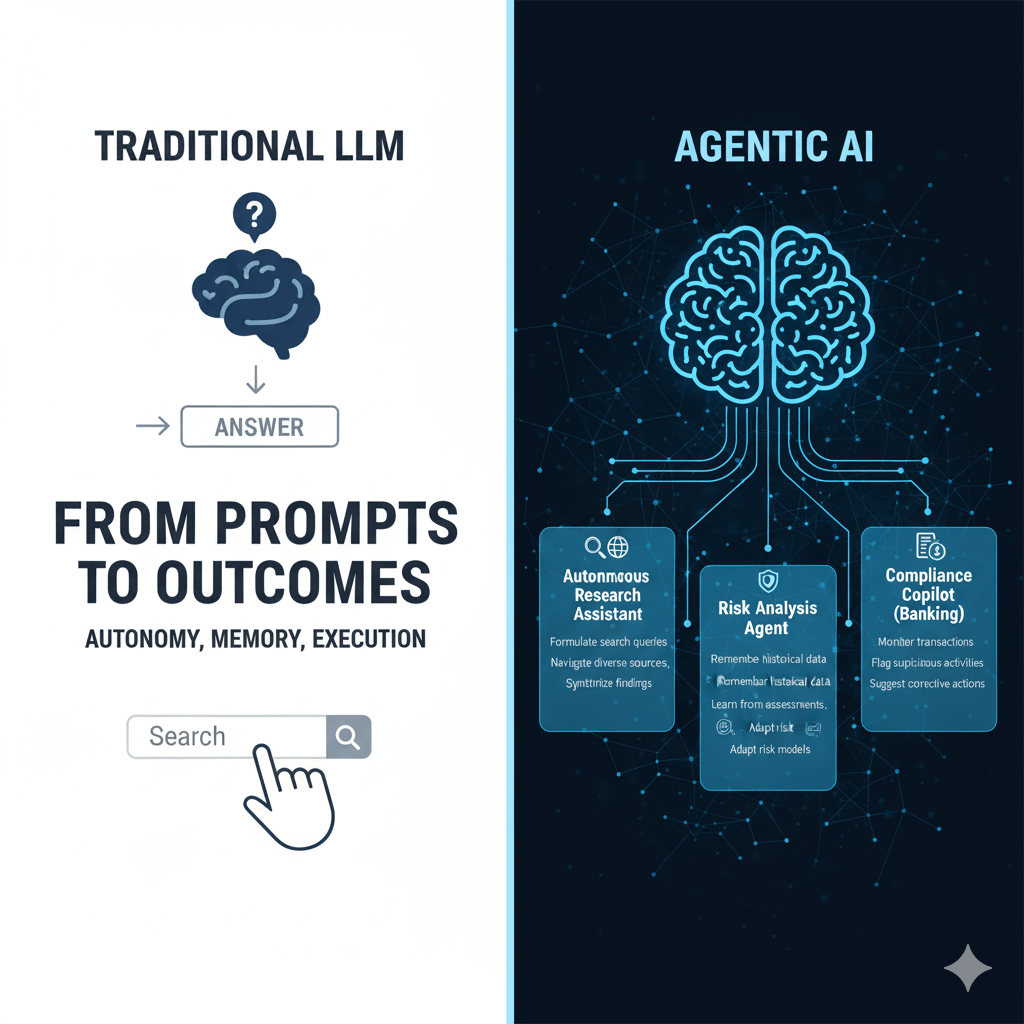

The landscape of Artificial Intelligence is evolving rapidly, moving beyond static models toward dynamic, autonomous systems known as AI agents. Large Language Models (LLMs) form the foundational “brain” of many of these agents, but what distinguishes an agent from a standalone LLM comes down to three capabilities: autonomy, memory, and goal-oriented execution.

Autonomy: Beyond Simple Prompts

Traditional LLMs are primarily reactive. They respond to a given prompt, generating text based on the patterns they’ve learned from vast datasets. Their “thinking” is confined to that single interaction. AI agents, on the other hand, are designed for autonomy. They can initiate actions, make decisions, and operate without constant human intervention. This independence allows them to tackle complex tasks that require multiple steps and adaptive strategies.

Imagine an autonomous research assistant. Instead of you prompting an LLM for each piece of information, an AI agent could:

- Formulate search queries based on a high-level research question.

- Navigate diverse information sources (databases, academic papers, web).

- Synthesize findings and identify gaps in knowledge.

- Even refine its own research questions as it learns more, all without direct human oversight for each step.
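The loop above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design. The `SOURCES` dictionary and the `FOLLOW_UPS` keyword rules are hypothetical stand-ins for real search APIs and for the LLM that would normally propose follow-up queries. The sketch shows only the control flow: the agent picks its own next query and records a gap whenever a search comes back empty. It stops on its own when it runs out of questions or hits a step budget.

```python
from collections import deque

# Hypothetical information sources: each maps a query to text snippets.
# A real agent would call search APIs, databases, or an LLM here.
SOURCES = {
    "web": {"battery chemistry": ["Solid-state cells may raise energy density."]},
    "papers": {"solid-state cells": ["Dendrite growth limits cycle life."]},
}

# Hypothetical refinement rules: a term seen in a snippet suggests a new query.
# In practice an LLM would propose these follow-ups.
FOLLOW_UPS = {"Solid-state": "solid-state cells", "Dendrite": "dendrite growth"}


def search(query: str) -> list[str]:
    """Query every source and collect matching snippets."""
    return [s for source in SOURCES.values() for s in source.get(query, [])]


def refine(snippets: list[str]) -> list[str]:
    """Derive new queries from what was just learned."""
    return [q for s in snippets for term, q in FOLLOW_UPS.items() if term in s]


def research(question: str, max_steps: int = 10) -> tuple[list[str], list[str]]:
    """Run the research loop with no per-step human input; return (findings, gaps)."""
    queue, seen = deque([question]), {question}
    findings: list[str] = []
    gaps: list[str] = []
    for _ in range(max_steps):  # the step budget bounds the agent's autonomy
        if not queue:
            break
        query = queue.popleft()
        snippets = search(query)
        if not snippets:
            gaps.append(query)  # nothing found: record a knowledge gap
        findings.extend(snippets)
        for new_query in refine(snippets):
            if new_query not in seen:  # avoid re-asking the same question
                seen.add(new_query)
                queue.append(new_query)
    return findings, gaps


findings, gaps = research("battery chemistry")
print(findings)
print(gaps)  # ['dendrite growth']
```

Running `research("battery chemistry")` follows two hops of refined queries and reports `"dendrite growth"` as an open gap. The `max_steps` budget is worth keeping in any real agent, because autonomy without a stopping condition can loop indefinitely.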

Memory: Learning and Adapting Over Time

LLMs have a “context window”: the fixed amount of text, measured in tokens, that the model can consider in a single call. It lets them remember parts of a recent conversation, but that memory is ephemeral and limited. Anything outside the window is invisible to the model, and each new session is largely a fresh start. AI agents, however, are built with more durable memory systems. They can store information, past experiences, and learned strategies over extended periods, allowing them to build a cumulative understanding of their environment and tasks.
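To make the contrast concrete, here is a minimal sketch of the two kinds of memory. `ContextWindow` and `PersistentMemory` are hypothetical classes. For simplicity, the window counts messages rather than tokens, and the persistent store is a JSON file rather than a database or vector store. The point is that the window silently drops older content, while notes written to the store survive into a new session.

```python
import json
import tempfile
from collections import deque
from pathlib import Path


class ContextWindow:
    """Ephemeral memory: only the most recent messages are kept.
    Simplification: real context windows are measured in tokens, not messages."""

    def __init__(self, max_messages: int) -> None:
        self.messages: deque[str] = deque(maxlen=max_messages)  # oldest entries drop off

    def add(self, message: str) -> None:
        self.messages.append(message)


class PersistentMemory:
    """Durable memory: notes are written to disk and reloaded by later sessions."""

    def __init__(self, path: Path) -> None:
        self.path = path
        self.notes: list[str] = json.loads(path.read_text()) if path.exists() else []

    def remember(self, note: str) -> None:
        self.notes.append(note)
        self.path.write_text(json.dumps(self.notes))  # persist immediately

    def recall(self, keyword: str) -> list[str]:
        """Naive case-insensitive keyword lookup; a real agent might use semantic search."""
        return [n for n in self.notes if keyword.lower() in n.lower()]


with tempfile.TemporaryDirectory() as tmp:
    store_path = Path(tmp) / "agent_memory.json"

    window = ContextWindow(max_messages=2)
    for message in ["Client prefers email", "Any updates?", "Send the summary"]:
        window.add(message)
    print(list(window.messages))  # ['Any updates?', 'Send the summary']

    PersistentMemory(store_path).remember("Client prefers email")
    # A fresh instance stands in for a new session and reloads the stored note.
    print(PersistentMemory(store_path).recall("email"))  # ['Client prefers email']
```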

Consider a risk analysis agent in banking. This agent wouldn’t just analyze a single transaction. It would:

- Remember historical data on fraudulent activities and risk patterns.

- Learn from previous risk assessments it conducted.

- Adapt its risk models based on new information and changing market conditions, with the aim of improving its accuracy over time.
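A toy version of this behavior might look like the following. It assumes a single feature (transaction amount) and a simple one-sided z-score rule. `RiskAgent`, its default threshold of 3.0, and the feedback multipliers are illustrative assumptions, not a validated risk model. Memory is the per-customer history of accepted transactions. Learning from previous assessments is handled by `record_outcome`, which nudges the threshold after a missed fraud or a false alarm.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class RiskAgent:
    """Hypothetical risk scorer that keeps memory between assessments."""
    threshold: float = 3.0  # flag when the amount is this many std devs above the mean
    min_history: int = 3    # do not score until this many past transactions exist
    history: dict[str, list[float]] = field(default_factory=dict)

    def assess(self, customer: str, amount: float) -> bool:
        """Return True if the amount is unusually high for this customer."""
        past = self.history.setdefault(customer, [])
        flagged = False
        if len(past) >= self.min_history:
            mu, sigma = mean(past), pstdev(past)
            if sigma == 0:
                flagged = amount > mu  # constant history: any increase is unusual
            else:
                flagged = (amount - mu) / sigma > self.threshold
        if not flagged:
            past.append(amount)  # only unflagged transactions update the baseline
        return flagged

    def record_outcome(self, was_fraud: bool, was_flagged: bool) -> None:
        """Adapt the threshold based on the result of a past assessment."""
        if was_fraud and not was_flagged:
            self.threshold *= 0.9   # missed fraud: become more sensitive
        elif was_flagged and not was_fraud:
            self.threshold *= 1.05  # false alarm: become slightly less sensitive


agent = RiskAgent()
for amount in [100, 110, 90, 100, 105]:
    agent.assess("acct-1", amount)
print(agent.assess("acct-1", 500))  # True: far above the remembered baseline
agent.record_outcome(was_fraud=True, was_flagged=False)
print(round(agent.threshold, 2))  # 2.7
```

Keeping flagged transactions out of the baseline is a deliberate choice. Without it, a burst of suspicious activity would gradually redefine "normal" for that customer.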

Goal-Oriented Execution: Strategic Problem Solving

The ultimate differentiator is goal-oriented execution. LLMs generate text; they don’t inherently “do” anything in the real or digital world beyond that. AI agents are designed to achieve specific goals, breaking down complex objectives into actionable sub-tasks and executing them sequentially or in parallel. They can utilize tools, interact with APIs, and adapt their plans if obstacles arise.
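The decompose, execute, and adapt cycle can be illustrated with a small sequential executor. Everything here is hypothetical: the currency-conversion goal, the tool functions, and the simulated outage in `fetch_rate_live`. The plan lists sub-tasks in order, each with candidate tools in order of preference. When a tool fails, the executor adapts by falling back to the next candidate instead of abandoning the goal. This is a deliberately simple form of replanning, and it runs sub-tasks sequentially rather than in parallel.

```python
from typing import Callable

State = dict
Tool = Callable[[State], State]


def fetch_rate_live(state: State) -> State:
    """Hypothetical live API call; simulates an outage (an obstacle)."""
    raise ConnectionError("rates service unavailable")


def fetch_rate_cached(state: State) -> State:
    """Hypothetical fallback using a cached EUR->USD rate."""
    return {**state, "rate": 1.1}


def convert(state: State) -> State:
    return {**state, "converted": round(state["amount"] * state["rate"], 2)}


def summarize(state: State) -> State:
    return {**state, "report": f"{state['amount']} EUR = {state['converted']} USD"}


# The goal "report an amount in USD", decomposed into ordered sub-tasks.
PLAN: list[tuple[str, list[Tool]]] = [
    ("get exchange rate", [fetch_rate_live, fetch_rate_cached]),
    ("convert amount", [convert]),
    ("write report", [summarize]),
]


def execute(plan: list[tuple[str, list[Tool]]], state: State) -> tuple[State, list[str]]:
    """Run each sub-task in order, falling back to alternative tools on failure."""
    log: list[str] = []
    for subtask, tools in plan:
        for tool in tools:
            try:
                state = tool(state)
                log.append(f"{subtask}: {tool.__name__} ok")
                break
            except Exception as err:  # obstacle: record it and try the next tool
                log.append(f"{subtask}: {tool.__name__} failed ({err})")
        else:  # no tool succeeded, so the goal cannot be reached
            raise RuntimeError(f"could not complete sub-task: {subtask}")
    return state, log


final, log = execute(PLAN, {"amount": 100.0})
print(final["report"])  # 100.0 EUR = 110.0 USD
print(*log, sep="\n")
```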

A compliance copilot in banking exemplifies this. Its goal isn’t just to explain regulations but to help ensure adherence to them. It might:

- Monitor transactions for compliance breaches.

- Flag suspicious activities that deviate from regulatory guidelines.

- Generate reports for human review.

- Even suggest corrective actions grounded in banking regulations and the bank’s internal policies.
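The monitor, flag, report, and suggest workflow can be sketched as a small rule engine over transactions. This is a minimal sketch under explicit assumptions. The `Transaction` fields, the 10,000 cash limit, the `"XX"` watchlist code, and the suggested actions are illustrative placeholders, not actual regulatory requirements. A real copilot would source its rules from the applicable regulations and the bank's policies. Note that the output is a report for a human reviewer: the code flags breaches and suggests actions, but it does not act on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    tx_id: str
    customer: str
    amount: float
    kind: str     # "cash" or "wire"
    country: str  # destination country code


# Illustrative rules only; real thresholds and lists come from regulators and policy.
CASH_LIMIT = 10_000
WATCHLIST = {"XX"}  # hypothetical country code

# Each rule: (name, check, suggested corrective action).
RULES = [
    ("large cash transaction",
     lambda t: t.kind == "cash" and t.amount > CASH_LIMIT,
     "Escalate for regulatory reporting review."),
    ("wire to watchlisted country",
     lambda t: t.kind == "wire" and t.country in WATCHLIST,
     "Hold the wire pending enhanced due diligence."),
]


def monitor(transactions: list[Transaction]) -> list[dict]:
    """Return one finding per (transaction, rule) breach."""
    return [
        {"tx_id": t.tx_id, "rule": name, "suggested_action": action}
        for t in transactions
        for name, check, action in RULES
        if check(t)
    ]


def report(findings: list[dict]) -> str:
    """Plain-text summary for a human reviewer."""
    if not findings:
        return "No compliance issues found."
    lines = [f"{len(findings)} item(s) for review:"]
    lines += [f"- {f['tx_id']}: {f['rule']} -> {f['suggested_action']}" for f in findings]
    return "\n".join(lines)


txs = [
    Transaction("t1", "c1", 12_500, "cash", "US"),
    Transaction("t2", "c2", 3_000, "wire", "XX"),
    Transaction("t3", "c3", 800, "cash", "US"),
]
print(report(monitor(txs)))
```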

In essence, AI agents combine the linguistic and reasoning power of LLMs with additional layers of autonomy, persistent memory, and strategic execution. This combination moves AI from being a sophisticated tool for generating text to an active participant in problem-solving and task completion, pointing toward a future where AI systems can independently drive outcomes across many domains.
