Why MLOps, Model Governance, and Explainability Are Central to Responsible AI

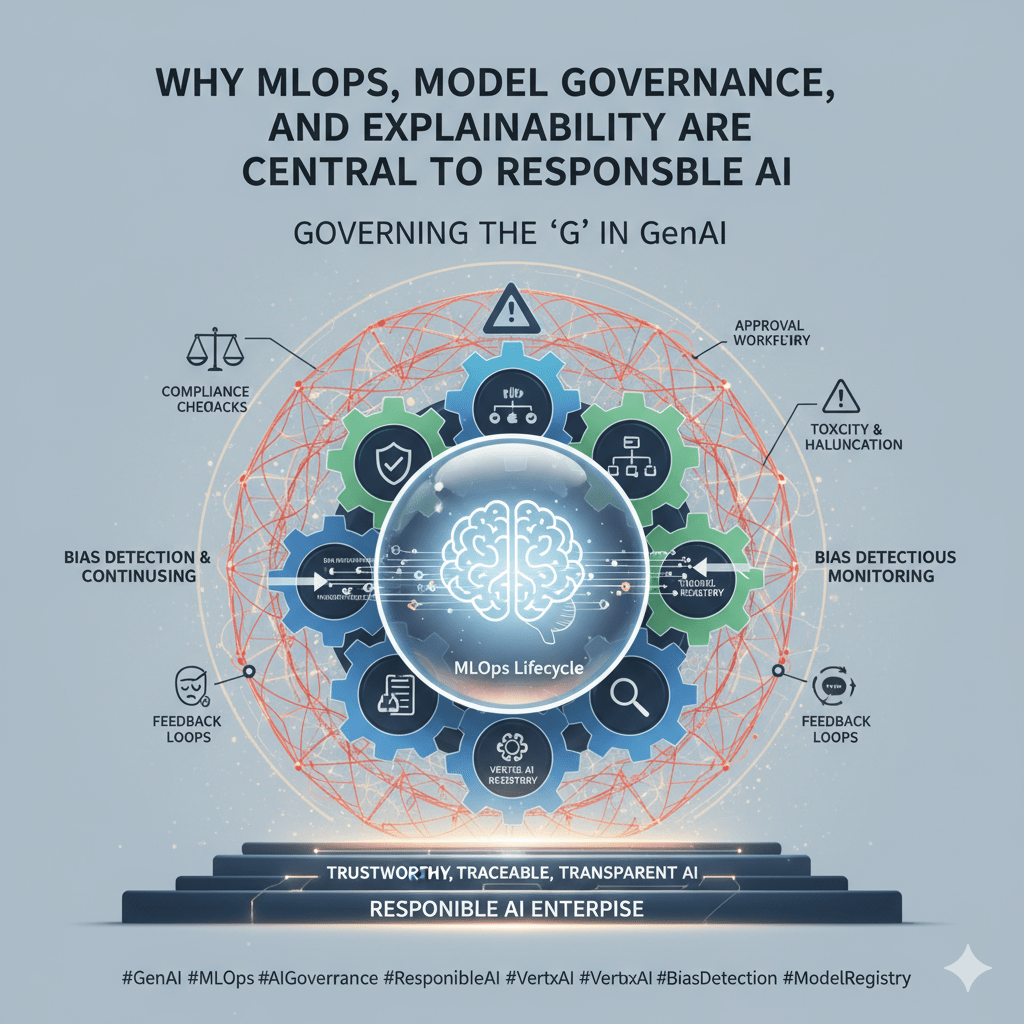

Generative AI (GenAI) has brought creativity and automation into enterprise workflows, but behind the scenes, the “G” in GenAI also stands for Governance. As organizations experiment with large language models and multimodal systems, making sure those systems are governed, monitored, and explainable becomes critical.

Today’s AI leaders must move beyond experimentation and build sustainable MLOps and governance pipelines that keep GenAI models transparent, compliant, and monitored for bias throughout their lifecycle.

1. From ModelOps to MLOps to GenAIOps

In the traditional ML world, MLOps focuses on automating the model lifecycle, from data ingestion and training to deployment and monitoring.

However, in the GenAI era, this extends further into GenAIOps, where human oversight, prompt governance, and model lineage are equally vital.

Organizations need to track:

- Which foundation models were fine-tuned.

- What datasets and prompt templates were used.

- Who approved model promotion to production.

This level of traceability ensures accountability, not just performance.
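As a minimal sketch of what such a traceability record could look like, the snippet below models one auditable entry per model version. The class name `LineageRecord` and all of its fields are hypothetical and chosen for illustration; they are not part of any particular platform's API.

```python
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
from typing import Tuple
import hashlib
import json


@dataclass(frozen=True)
class LineageRecord:
    """One auditable entry per model version (all field names are illustrative)."""
    foundation_model: str           # which foundation model was fine-tuned
    dataset_uris: Tuple[str, ...]   # datasets used for tuning
    prompt_template: str            # prompt template shipped with the model
    approved_by: str                # who approved promotion to production
    approved_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def fingerprint(self) -> str:
        """Stable hash of the lineage content, excluding the approval timestamp."""
        payload = asdict(self)
        payload.pop("approved_at")
        canonical = json.dumps(payload, sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


record = LineageRecord(
    foundation_model="example-foundation-model",       # placeholder name
    dataset_uris=("gs://example-bucket/tuning.jsonl",),  # placeholder URI
    prompt_template="Summarize the following text: {text}",
    approved_by="reviewer@example.com",
)
print(record.fingerprint())
```

Because the fingerprint ignores the timestamp, two records describing the same model, data, prompt, and approver hash to the same value, which makes it easy to spot when any one of those inputs has changed.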

2. Governance with Vertex AI Model Registry

Platforms like Google Cloud Vertex AI are redefining how governance can be embedded directly into the model lifecycle.

The Vertex AI Model Registry acts as a central system of record, allowing teams to:

- Register, version, and compare models.

- Track metadata such as hyperparameters, datasets, and performance metrics.

- Support approval workflows for promotion to production, for example by using version aliases and labels to mark which version has been approved.

- Integrate with CI/CD pipelines to enforce compliance checks before deployment.

By maintaining a single source of truth, enterprises can build confidence that every model, including GenAI variants, meets both performance and governance standards.
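To make the idea of a pre-deployment compliance check concrete, here is a small sketch of a promotion gate that a CI/CD step could run. It is deliberately platform-neutral: `ModelVersion`, `REQUIRED_METADATA`, `MIN_METRICS`, and the thresholds are assumptions made for illustration and are not Vertex AI SDK types or settings.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    """Hypothetical registry entry; not a Vertex AI SDK type."""
    name: str
    version: str
    metrics: Dict[str, float]
    metadata: Dict[str, str]
    approved_by: Optional[str] = None


# Assumed policy: required metadata keys and minimum metric values.
REQUIRED_METADATA = ("dataset_uri", "base_model")
MIN_METRICS = {"accuracy": 0.90}


def compliance_violations(mv: ModelVersion) -> List[str]:
    """Return human-readable policy violations; an empty list means the gate passes."""
    problems = []
    for key in REQUIRED_METADATA:
        if not mv.metadata.get(key):
            problems.append(f"missing metadata: {key}")
    for metric, floor in MIN_METRICS.items():
        value = mv.metrics.get(metric)
        if value is None:
            problems.append(f"missing metric: {metric}")
        elif value < floor:
            problems.append(f"{metric}={value:.3f} below required {floor:.3f}")
    if not mv.approved_by:
        problems.append("no recorded approver")
    return problems


def can_promote(mv: ModelVersion) -> bool:
    """True only when every policy check passes."""
    return not compliance_violations(mv)
```

In a pipeline, a non-empty result from `compliance_violations` would fail the build and surface the reasons to the team, so the registry entry and the deployment decision stay in sync.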

3. Bias Detection and Continuous Monitoring

Bias is not static; it evolves with data. For GenAI models that generate text, images, or decisions, continuous bias detection is essential.

Governance pipelines should integrate:

- Pre-deployment testing: using fairness and toxicity benchmarks.

- Post-deployment drift detection: monitoring responses for bias or hallucination.

- Feedback loops: allowing users to flag problematic outputs for retraining.

With tools such as Vertex AI's evaluation service and Vertex Explainable AI, teams can visualize model behavior, understand feature importance, and address hidden biases before they scale.
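The post-deployment monitoring and feedback-loop steps can be sketched as a sliding-window monitor over user flags. This is an illustrative simplification, not a feature of any specific tool: the class `FlagRateMonitor`, the baseline rate, and the tolerance are all assumed values that a team would calibrate from its own pre-deployment testing.

```python
from collections import deque


class FlagRateMonitor:
    """Signal drift when the share of flagged responses in a sliding window
    exceeds an assumed baseline rate plus a tolerance."""

    def __init__(self, baseline_rate: float, tolerance: float, window: int):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self._recent = deque(maxlen=window)  # keeps only the newest `window` outcomes

    def record(self, flagged: bool) -> None:
        """Store whether a user (or an automated check) flagged one response."""
        self._recent.append(1 if flagged else 0)

    def current_rate(self) -> float:
        """Fraction of flagged responses in the current window."""
        return sum(self._recent) / len(self._recent) if self._recent else 0.0

    def drifted(self) -> bool:
        """Judge drift only once the window is full, to avoid noisy early alerts."""
        if len(self._recent) < self._recent.maxlen:
            return False
        return self.current_rate() > self.baseline_rate + self.tolerance


monitor = FlagRateMonitor(baseline_rate=0.1, tolerance=0.1, window=10)
for flagged in [True] + [False] * 9:
    monitor.record(flagged)
print(monitor.drifted())  # window is full, rate equals the baseline
```

A production setup would likely track flags per category (for example bias versus hallucination) and per user segment, but the same windowed comparison against a baseline applies.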

4. Explainability: The Backbone of Trust

Explainability transforms AI from a black box into a glass box.

For models that impact financial decisions, healthcare outcomes, or customer interactions, understanding why a model generated a particular output is as important as what it produced.

Explainability layers, such as SHAP values, attention maps, and prompt traceability, empower governance teams to validate model rationale and ensure ethical compliance.
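To show what SHAP values are built on, the sketch below computes exact Shapley values for a toy scoring function by averaging each feature's marginal contribution over every feature ordering. It does not use the `shap` library, which relies on approximations for realistic models; the function `shapley_values`, the toy model, and the feature names are assumptions for illustration, and the brute-force approach is only practical for a handful of features.

```python
from itertools import permutations
from typing import Callable, Dict


def shapley_values(
    predict: Callable[[Dict[str, float]], float],
    instance: Dict[str, float],
    baseline: Dict[str, float],
) -> Dict[str, float]:
    """Exact Shapley values: average marginal contribution over all feature orderings."""
    features = list(instance)
    totals = {name: 0.0 for name in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)            # start from the reference input
        previous = predict(current)
        for name in order:
            current[name] = instance[name]  # switch one feature to its real value
            value = predict(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


def toy_score(x: Dict[str, float]) -> float:
    """Hypothetical scoring model with one interaction term."""
    return 2.0 * x["income"] - 3.0 * x["debt"] + x["income"] * x["tenure"]


applicant = {"income": 1.0, "debt": 2.0, "tenure": 4.0}
reference = {"income": 0.0, "debt": 0.0, "tenure": 0.0}
attributions = shapley_values(toy_score, applicant, reference)
print(attributions)
```

The attributions always sum to the difference between the model's output for the instance and for the baseline, which is the property that lets a governance team account for every part of a score.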

When explainability is built into the design phase, not added as an afterthought, it becomes a driver of trust and regulatory readiness.

5. Governance-by-Design for GenAI

Ultimately, governing the “G” in GenAI is about designing systems where governance isn’t a gate, but a guardrail.

Embedding governance-by-design ensures that innovation can move fast, but never unchecked.

As organizations adopt GenAI at scale, combining MLOps discipline, Vertex AI governance, bias detection, and explainability creates a blueprint for trustworthy, traceable, and transparent AI. That blueprint is the foundation of a responsible AI enterprise.
