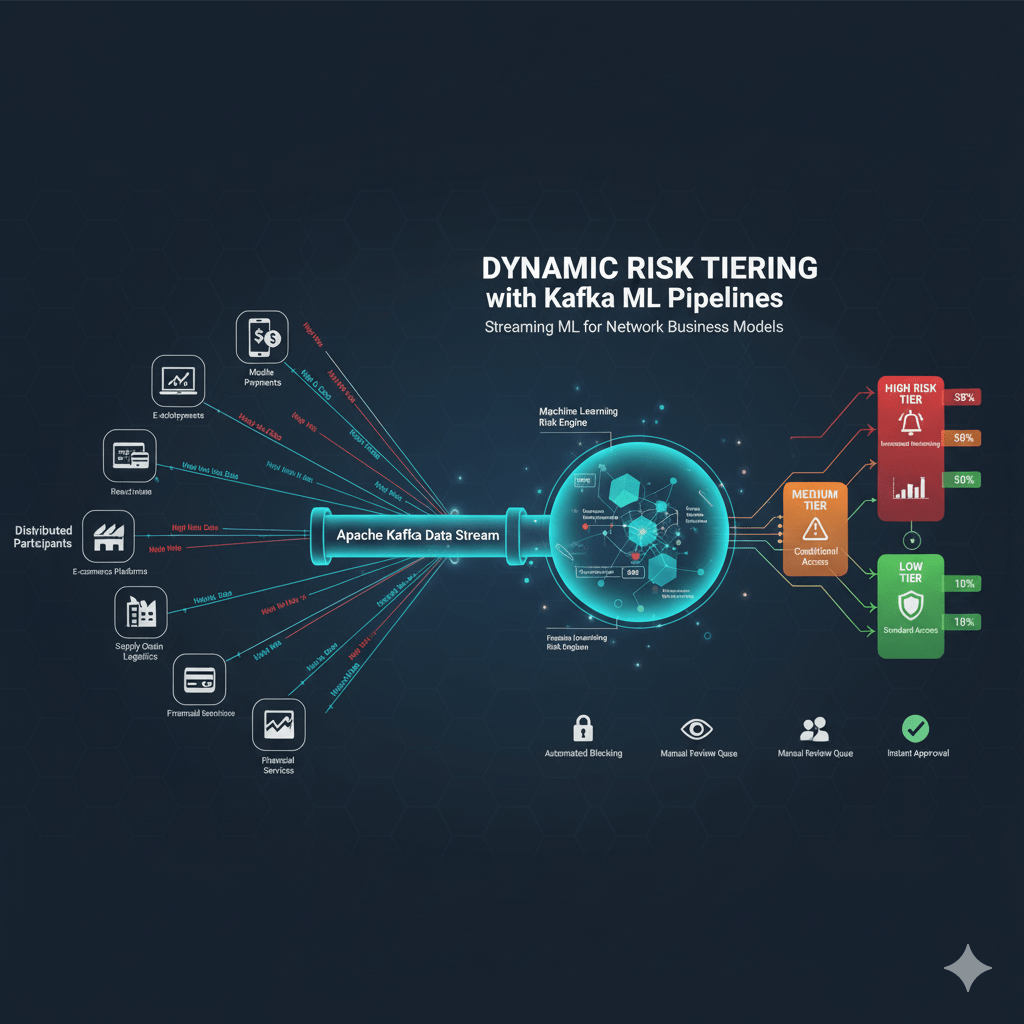

How Streaming ML Classifies and Manages Risk Across Distributed Participants in a Network Business Model

In today’s interconnected ecosystem, risk no longer resides within organizational boundaries; it flows across networks of customers, suppliers, platforms, and partners.

Whether it’s a banking network assessing credit exposure or a logistics platform managing vendor reliability, risk is dynamic, evolving with every transaction, interaction, and event.

To stay ahead, enterprises are turning to real-time machine learning pipelines powered by Apache Kafka to continuously classify and tier risks across distributed participants in a network business model.

1. The Shift from Static to Dynamic Risk

Traditional risk scoring systems operate in batches, assessing exposure periodically based on static data snapshots.

But in a networked economy, risk can propagate almost instantly: a single vendor failure, market fluctuation, or compliance breach can ripple across multiple entities within seconds.

Dynamic risk tiering solves this by:

- Continuously ingesting real-time data streams.

- Applying ML-based anomaly detection and classification.

- Reassigning entities to low-, medium-, or high-risk tiers on the fly.

This enables proactive intervention instead of reactive damage control.
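To make the tiering loop concrete, here is a minimal Python sketch. It assumes a stream of per-entity transaction amounts, uses a rolling z-score as a deliberately simple stand-in for ML-based anomaly detection, and replaces the Kafka consumer with a plain loop. The class names, window size, minimum history, and tier cut-offs are all hypothetical.

```python
import math
from collections import defaultdict, deque


class RollingStats:
    """Rolling window of recent values for one entity (stand-in for a state store)."""

    def __init__(self, window: int, min_history: int):
        self.values = deque(maxlen=window)
        # At least 2 points are needed for a sample standard deviation.
        self.min_history = max(2, min_history)

    def add(self, x: float) -> None:
        self.values.append(x)

    def zscore(self, x: float) -> float:
        n = len(self.values)
        if n < self.min_history:
            return 0.0  # not enough history yet: treat the event as normal
        mean = sum(self.values) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in self.values) / (n - 1))
        return 0.0 if std == 0 else (x - mean) / std


def tier_for(z: float) -> str:
    # Hypothetical cut-offs; real ones would come from calibration.
    if abs(z) >= 3.0:
        return "high"
    if abs(z) >= 2.0:
        return "medium"
    return "low"


class DynamicTiering:
    def __init__(self, window: int = 20, min_history: int = 5):
        self.stats = defaultdict(lambda: RollingStats(window, min_history))
        self.tiers: dict[str, str] = {}

    def on_event(self, entity_id: str, amount: float) -> str:
        stats = self.stats[entity_id]
        z = stats.zscore(amount)  # score against history *before* this event
        stats.add(amount)
        self.tiers[entity_id] = tier_for(z)  # reassign the tier on the fly
        return self.tiers[entity_id]


# Usage: a plain loop standing in for a Kafka consumer.
engine = DynamicTiering(window=10, min_history=5)
for amt in [100, 102, 98, 101, 99, 100, 103, 97]:
    engine.on_event("vendor-42", amt)
print(engine.on_event("vendor-42", 250))  # prints "high"
```

Each event is scored against the entity's history before it is added, so an outlier cannot inflate its own baseline. In a production pipeline, the z-score would typically give way to a trained model, and the per-entity state would live in a stream processor's state store rather than a Python dict.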

2. Kafka as the Real-Time Nervous System

At the heart of this transformation is Apache Kafka, a widely adopted backbone for event-driven architectures in distributed systems.

Kafka enables:

- Streaming ingestion of transactional, behavioral, and third-party data across all nodes in the network.

- Decoupled pipelines where ML models subscribe to topics for risk scoring.

- Stateful processing using Kafka Streams or Flink to maintain contextual risk scores.

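- Auditability and lineage: because Kafka keeps events in an append-only, replayable log (within the configured retention period), each model decision can be traced back to its source event by topic, partition, and offset.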

By integrating Kafka with model registries and governance frameworks, organizations can build trustworthy, scalable risk engines that operate in real time.
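The sketch below illustrates two of those properties, keyed stateful processing and lineage, without a running broker. It assumes each record carries the topic/partition/offset coordinates Kafka assigns, keeps a per-participant exponentially weighted score in a plain dict (standing in for a Kafka Streams or Flink state store), and attaches the source coordinates to every decision. The topic name, the `risk_signal` field, and the smoothing factor are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Coordinates Kafka attaches to every record, plus a hypothetical payload."""
    topic: str
    partition: int
    offset: int
    key: str            # participant id; also used as the partitioning key
    risk_signal: float  # hypothetical per-event signal in [0, 1]


@dataclass(frozen=True)
class Decision:
    participant: str
    score: float
    source: tuple  # (topic, partition, offset) of the triggering record


class KeyedRiskState:
    """Plain-dict stand-in for a keyed state store behind a stream aggregation."""

    def __init__(self, alpha: float = 0.3):
        self.alpha = alpha  # weight of the newest signal (hypothetical default)
        self.scores: dict[str, float] = {}

    def process(self, rec: Record) -> Decision:
        # The first event for a key seeds the state with its own signal.
        prev = self.scores.get(rec.key, rec.risk_signal)
        # Exponentially weighted moving average keeps recent context per participant.
        score = self.alpha * rec.risk_signal + (1 - self.alpha) * prev
        self.scores[rec.key] = score
        # Lineage: every decision carries the exact source coordinates.
        return Decision(rec.key, score, (rec.topic, rec.partition, rec.offset))


records = [
    Record("participant-events", 0, 0, "acme", 0.2),
    Record("participant-events", 0, 1, "acme", 0.9),
    Record("participant-events", 1, 0, "globex", 0.1),
]
state = KeyedRiskState(alpha=0.5)
decisions = [state.process(r) for r in records]
for d in decisions:
    print(d.participant, round(d.score, 3), d.source)
```

Keying records by participant matters here: with Kafka's default partitioner, records that share a key land in the same partition (as long as the partition count does not change), and Kafka only guarantees ordering within a partition, so each participant's events are aggregated in order.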

3. ML Pipelines for Tiering and Classification

A typical dynamic risk-tiering pipeline includes:

- Feature Stream: Real-time data from transactions, interactions, and system logs.

- Inference Layer: ML models (e.g., ensemble classifiers, temporal graph networks) trained to predict risk levels.

- Decision Layer: Stream processors that assign or adjust risk tiers based on thresholds and model confidence.

- Feedback Loop: Continuous learning from downstream events such as defaults, fraud alerts, or manual overrides.

This streaming feedback loop lets risk tiers evolve with each entity's observed behavior, enabling adaptive governance.
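Here is one way the inference, decision, and feedback stages could fit together, as a minimal sketch. An online logistic-regression model stands in for the ensemble or graph models named above; "confidence" is approximated as distance from a tier threshold, with the current tier held when a score lands too close to a cut-off; and labelled downstream outcomes update the model one event at a time. The features, thresholds, margin, and learning rate are all hypothetical.

```python
import math

HIGH_CUT, MEDIUM_CUT = 0.7, 0.3  # hypothetical tier thresholds on P(adverse event)


class OnlineLogisticModel:
    """Inference layer: logistic regression updated one labelled outcome at a time."""

    def __init__(self, n_features: int, lr: float = 0.1):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict_proba(self, x: list[float]) -> float:
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        # Numerically stable sigmoid.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def update(self, x: list[float], y: int) -> None:
        # Feedback loop: y = 1 when a downstream event (default, fraud alert,
        # manual override) confirms the risk, else 0. One SGD step on log-loss.
        err = self.predict_proba(x) - y  # gradient of log-loss w.r.t. the logit
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err


def decide_tier(p: float, previous: str, margin: float = 0.05) -> str:
    """Decision layer: map a probability to a tier, holding the tier near a cut-off."""
    if abs(p - HIGH_CUT) < margin or abs(p - MEDIUM_CUT) < margin:
        return previous  # low confidence in the boundary call: avoid flapping
    if p >= HIGH_CUT:
        return "high"
    if p >= MEDIUM_CUT:
        return "medium"
    return "low"


# Hypothetical features: [late_payment_ratio, credit_utilisation]
model = OnlineLogisticModel(n_features=2, lr=0.5)
risky, healthy = [0.9, 0.8], [0.1, 0.2]
for _ in range(500):  # replayed outcome events feeding the feedback loop
    model.update(risky, 1)
    model.update(healthy, 0)

print(decide_tier(model.predict_proba(risky), previous="medium"))
print(decide_tier(model.predict_proba(healthy), previous="medium"))
```

Holding the previous tier near a boundary is a form of hysteresis: it keeps participants from flapping between tiers on small score changes, at the cost of reacting slightly later to genuine shifts.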

4. Applying It to Network Business Models

In a network business model, value is co-created across participants, and so is risk.

Kafka-based ML pipelines can power use cases such as:

- Banking ecosystems: Assessing SME creditworthiness dynamically across interconnected supply chains.

- Insurance networks: Adjusting risk exposure based on live telematics or customer activity.

- Digital marketplaces: Monitoring vendor reliability and fraud probability in real time.

- Payment networks: Detecting anomalous transaction patterns and flagging systemic risk early.

Dynamic tiering provides the risk visibility layer needed for trust and scalability in these models.
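Because risk is shared across participants, a participant's tier can depend on its counterparties as well as on its own behavior. The sketch below shows one simple, hypothetical way to express that: each participant's effective risk is its own score plus a damped, exposure-weighted share of its counterparties' risk, iterated for a few rounds so risk can travel more than one hop. The exposure weights, damping factor `beta`, and round count are assumptions, not a calibrated contagion model.

```python
def propagate_risk(own: dict[str, float],
                   exposures: dict[str, dict[str, float]],
                   beta: float = 0.5,
                   rounds: int = 3) -> dict[str, float]:
    """Blend each participant's own score with the risk of those it depends on.

    exposures[a][b] is a hypothetical share (0..1) of a's business that depends on b.
    Each round pushes risk one more hop through the network.
    """
    risk = dict(own)
    for _ in range(rounds):
        nxt = {}
        for node, base in own.items():
            # Exposure-weighted risk of counterparties from the previous round.
            spill = sum(w * risk.get(nbr, 0.0)
                        for nbr, w in exposures.get(node, {}).items())
            nxt[node] = min(1.0, base + beta * spill)  # cap at the top of the scale
        risk = nxt
    return risk


own = {"supplier": 0.9, "manufacturer": 0.1, "retailer": 0.05}
exposures = {"manufacturer": {"supplier": 0.6}, "retailer": {"manufacturer": 0.8}}
effective = propagate_risk(own, exposures)
print({k: round(v, 3) for k, v in effective.items()})
# prints {'supplier': 0.9, 'manufacturer': 0.37, 'retailer': 0.198}
```

The retailer has no direct link to the stressed supplier, yet its effective risk rises from 0.05 to about 0.2 because it depends on the manufacturer, which in turn depends on the supplier.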

5. Governance and Explainability in Streaming Risk Systems

Streaming ML requires streaming governance.

Every real-time decision must be explainable, traceable, and compliant with regulatory expectations.

Integrating Kafka pipelines with:

- Model registries (e.g., Vertex AI Model Registry) for version control and lineage.

- Feature stores for reproducibility, with the same point-in-time feature values available to training and to real-time scoring.

- Explainability layers for transparency in automated tiering decisions.

…ensures the system is not just intelligent, but accountable.
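A practical way to make each automated tiering decision explainable and traceable is to emit a self-describing decision record alongside it. This minimal sketch assumes a linear scoring model, where each feature's contribution w·x adds up exactly to the logit; for non-linear models, attribution methods such as SHAP play a similar role. The model name, version string, feature names, and cut-offs are hypothetical, and the registry lookup is reduced to a plain string rather than any specific registry API.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class DecisionRecord:
    participant: str
    tier: str
    score: float          # model logit
    model_name: str       # hypothetical registry name
    model_version: str    # version as recorded in the model registry
    features: dict        # exact feature values used (reproducibility)
    contributions: dict   # per-feature additive contribution to the logit
    source: dict          # Kafka coordinates of the triggering event


def explain_linear(weights: dict[str, float], bias: float,
                   features: dict[str, float]) -> tuple[float, dict[str, float]]:
    """For a linear model, w_i * x_i decomposes the logit exactly (plus the bias)."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    return bias + sum(contributions.values()), contributions


def build_record(participant: str, weights: dict[str, float], bias: float,
                 features: dict[str, float], source: dict,
                 model_version: str) -> DecisionRecord:
    logit, contributions = explain_linear(weights, bias, features)
    # Hypothetical logit cut-offs for the tiers.
    tier = "high" if logit >= 1.0 else "medium" if logit >= 0.0 else "low"
    return DecisionRecord(participant, tier, logit, "risk-tier-model",
                          model_version, dict(features), contributions, dict(source))


record = build_record(
    participant="acme",
    weights={"late_payment_ratio": 2.0, "credit_utilisation": 1.0},
    bias=-1.0,
    features={"late_payment_ratio": 0.5, "credit_utilisation": 0.8},
    source={"topic": "participant-events", "partition": 0, "offset": 42},
    model_version="3",
)
audit_json = json.dumps(asdict(record), sort_keys=True)  # e.g. value for an audit topic
print(record.tier, record.contributions)
```

Serialized to JSON, such a record could be written to an audit topic, so a reviewer can see which model version made the call, on which inputs, driven by which features, and from which source event.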

6. The Road Ahead: Autonomous Risk Management

As enterprises mature, these Kafka-based ML pipelines can evolve into autonomous risk management systems: self-learning engines that balance exposure, detect contagion patterns, and enforce policy rules in real time.

Dynamic risk tiering represents the future of risk governance in motion, where Kafka becomes the circulatory system, ML the brain, and explainability the conscience.
