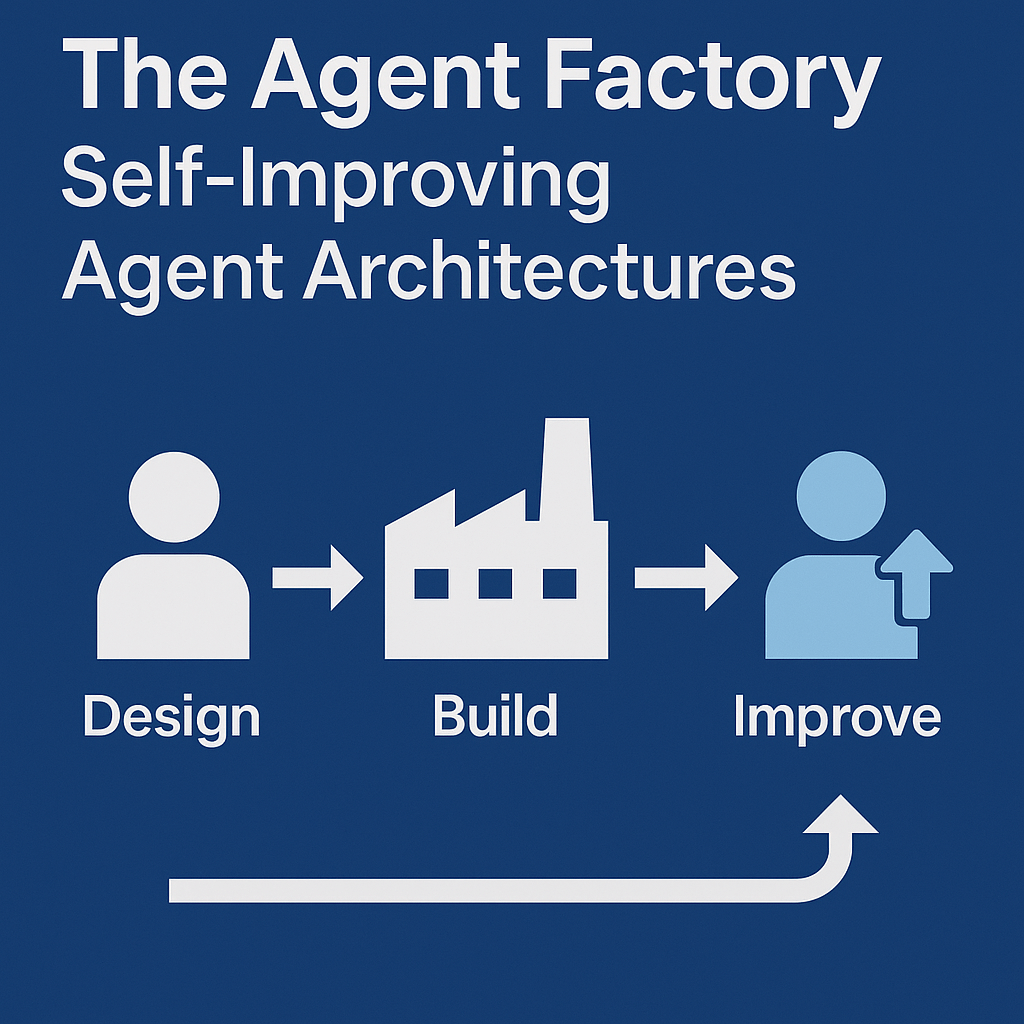

The next frontier in artificial intelligence is not just building smarter agents; it’s building agents that can build and improve themselves. Welcome to the world of the Agent Factory, where recursive self-improvement turns static AI models into dynamic, evolving problem-solvers.

From Static Models to Living Systems

Traditionally, AI agents are designed, trained, and deployed by humans. Once in production, they remain relatively static until researchers release a new version. But cutting-edge research is breaking this mold by creating architectures that allow agents to:

- Redesign themselves (modifying workflows, reasoning chains, or tool use),

- Train new agents with specialized skills, and

- Continuously evolve by integrating new data, feedback, and goals.

This turns AI from a fixed product into a living ecosystem of self-improving capabilities.

How Self-Improving Architectures Work

The concept is powered by three pillars:

- Recursive Self-Improvement

Agents don’t just execute tasks; they analyze their own performance, identify weaknesses, and modify their strategies. This mirrors the scientific method: experiment, observe, adjust.

- Agent Factories & Frameworks

Frameworks like AutoGen, LangChain, and OpenAI Swarm are laying the groundwork for “meta-agents” that coordinate other agents. These meta-agents can act as factories, spawning, training, and refining task-specific agents on demand.

- Dynamic Evolution

Instead of waiting for large model retraining cycles, self-improving agents evolve continuously. They add tools, expand memory, and adapt their reasoning to new domains, becoming more general over time.
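To make the first two pillars more concrete, here is a minimal Python sketch built around a toy fraud-detection task. Everything in it is hypothetical and is not taken from AutoGen, LangChain, or Swarm. An "agent" is nothing more than the set of rules (its "tools") it has enabled. `AgentFactory` spawns one agent per task. `self_improve` runs the experiment, observe, adjust loop as a greedy local search: try adding or removing a single rule, keep the change only if accuracy on labelled feedback strictly improves, and stop once no single change helps.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, List, Tuple

Txn = Dict[str, float]
Rule = Callable[[Txn], bool]

# Hypothetical "tools" an agent can choose to enable.
RULES: Dict[str, Rule] = {
    "large_amount": lambda t: t["amount"] > 1000,
    "foreign": lambda t: t["foreign"] == 1,
    "night": lambda t: t["night"] == 1,
}


@dataclass(frozen=True)
class Agent:
    """A toy agent whose entire strategy is the set of rules it uses."""
    task: str
    rules: FrozenSet[str] = frozenset()

    def flag(self, txn: Txn) -> bool:
        # Flag a transaction as fraud if any enabled rule fires.
        return any(RULES[name](txn) for name in self.rules)


def evaluate(agent: Agent, data: List[Tuple[Txn, bool]]) -> float:
    """Observe: fraction of labelled examples the agent gets right."""
    return sum(agent.flag(t) == label for t, label in data) / len(data)


def self_improve(agent: Agent, data: List[Tuple[Txn, bool]],
                 max_rounds: int = 10) -> Tuple[Agent, float]:
    """Experiment, observe, adjust until no single change helps."""
    best, best_score = agent, evaluate(agent, data)
    for _ in range(max_rounds):
        # Experiment: every variant that adds or removes exactly one rule.
        variants = [Agent(best.task, best.rules ^ {name}) for name in sorted(RULES)]
        score, candidate = max(((evaluate(v, data), v) for v in variants),
                               key=lambda pair: pair[0])
        # Adjust: keep the change only if it strictly improves accuracy.
        if score <= best_score:
            break
        best, best_score = candidate, score
    return best, best_score


class AgentFactory:
    """Hypothetical meta-agent: spawns one agent per task and refines it."""

    def __init__(self) -> None:
        self.registry: Dict[str, Agent] = {}

    def build(self, task: str, data: List[Tuple[Txn, bool]]) -> Tuple[Agent, float]:
        agent, score = self_improve(Agent(task), data)
        self.registry[task] = agent
        return agent, score


# Made-up labelled feedback: (transaction, is_fraud).
FEEDBACK: List[Tuple[Txn, bool]] = [
    ({"amount": 5000, "foreign": 0, "night": 0}, True),
    ({"amount": 50, "foreign": 1, "night": 0}, True),
    ({"amount": 40, "foreign": 0, "night": 1}, False),
    ({"amount": 30, "foreign": 0, "night": 0}, False),
]

if __name__ == "__main__":
    agent, score = AgentFactory().build("fraud", FEEDBACK)
    print(sorted(agent.rules), score)  # ['foreign', 'large_amount'] 1.0
```

Starting from an empty rule set (accuracy 0.5), the agent first adopts `foreign`, then adds `large_amount`, and stops when no further toggle improves accuracy. A real system would score candidate changes on held-out data rather than on the same feedback it learns from, to avoid overfitting.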

Why This Matters

The potential here is transformative:

- Adaptability: Agents evolve in real time as problems change.

- Efficiency: Human intervention is reduced because agents optimize themselves.

- Innovation: New problem-solving strategies emerge that weren’t explicitly programmed.

Imagine an agent in finance that learns new fraud patterns overnight, or a healthcare agent that designs a new diagnostic pipeline after reviewing research papers.

The Road Ahead

While the vision is powerful, challenges remain:

- Safety: Ensuring recursive improvement doesn’t lead to unintended behaviors.

- Governance: Setting boundaries for self-directed learning.

- Evaluation: Measuring progress when agents evolve beyond initial benchmarks.

Researchers are already experimenting with guardrails-as-code, risk-tiering frameworks, and human-in-the-loop checkpoints to ensure safe evolution.
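These three safeguards can be combined into a single policy check. The Python sketch below is a hypothetical illustration, not an existing framework: `ProposedChange`, `RiskTier`, `FORBIDDEN`, and `review` are invented names. Before a self-modification is applied, it must pass hard guardrails written as code and must not lower its evaluation score. It must also clear a bar that rises with its risk tier, and a high-risk change needs human approval through the `approve` callback.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable, List


class RiskTier(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class ProposedChange:
    """A self-modification an agent wants to apply (hypothetical schema)."""
    description: str
    tier: RiskTier
    touches: List[str]   # capabilities the change would modify
    score_before: float  # benchmark score of the current agent
    score_after: float   # benchmark score of the modified agent


# Guardrails-as-code: capabilities no self-modification may touch (illustrative).
FORBIDDEN = {"disable_logging", "modify_guardrails", "external_payments"}


def review(change: ProposedChange,
           approve: Callable[[ProposedChange], bool]) -> bool:
    """Return True only if the change may be applied."""
    # 1. Hard guardrails apply regardless of tier or score.
    if FORBIDDEN & set(change.touches):
        return False
    # 2. Evaluation gate: no change may regress the benchmark.
    if change.score_after < change.score_before:
        return False
    # 3. Risk tiering: medium-risk changes must show a measurable gain.
    if change.tier == RiskTier.MEDIUM and change.score_after <= change.score_before:
        return False
    # 4. Human-in-the-loop checkpoint for high-risk changes.
    if change.tier == RiskTier.HIGH:
        return bool(approve(change))
    return True
```

In practice `approve` might post the change to a review queue or prompt an operator. Passing it in as a callback keeps the policy itself deterministic and easy to test.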

👉 The Agent Factory is more than an idea; it’s a blueprint for the future of AI. Instead of static assistants, we’re moving toward adaptive, self-improving systems that can design, test, and evolve themselves. In the years ahead, the question won’t just be “What can an agent do?” but “What can it become?”
