As machine learning pipelines become more complex, monolithic models are being replaced by modular, distributed agents—each with a specific role. From data collectors to model predictors, explainability agents to validators, these components need to work in concert. The challenge? Ensuring real-time coordination, traceability, and resilience.

This is where Apache Kafka shines—not just as a messaging backbone, but as the orchestration fabric that holds multi-agent machine learning systems together.

🎯 What Are Multi-Agent ML Workflows?

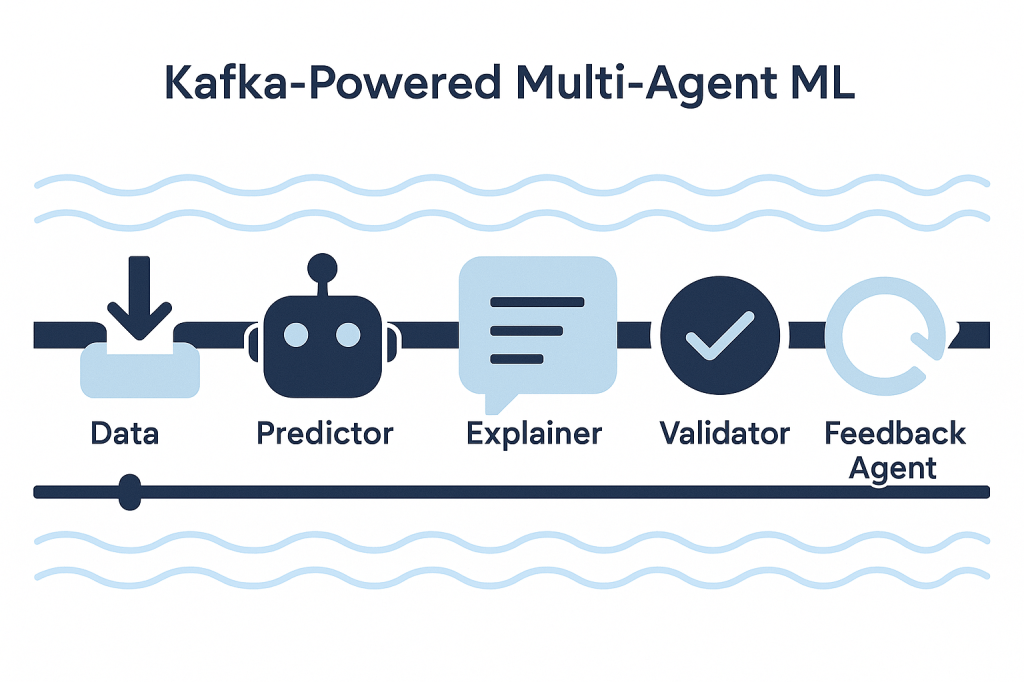

A multi-agent ML workflow splits responsibilities among specialized components or “agents”:

- Data Collector: Ingests and preprocesses raw data.

- Model Selector: Chooses the right model based on the context or metadata.

- Predictor: Runs inference and returns output.

- Explainer: Generates interpretability insights.

- Validator: Performs checks for drift, fairness, or confidence thresholds.

- Feedback Agent: Captures human or system feedback for retraining.

These agents need to exchange messages in real time, asynchronously, at scale, and without depending on one another directly, which makes Kafka a strong fit.

🧩 Kafka as the Messaging Layer

Kafka’s publish-subscribe model provides:

- Loose Coupling: Agents don’t need to know about each other directly.

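- Scalability: Topics are partitioned and replicated across brokers, so a well-provisioned cluster can sustain very high message rates while tolerating broker failures.

- Replayability: Events stay in the log for as long as the topic's retention settings allow, so they can be reprocessed for audits or retraining.

- Low Latency: Consumers process records as they arrive, which supports near-real-time responses as long as each agent keeps up with its input topics.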
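To make loose coupling and replayability concrete, here is a minimal sketch that uses an in-memory list as a stand-in for a single-partition topic. It does not talk to a real broker, and `InMemoryTopic` with its `produce`, `poll`, and `seek` methods is a hypothetical class written for this post, not a Kafka client API. The idea it illustrates is that reading never removes records, and each consumer group tracks its own offset.

```python
from collections import defaultdict


class InMemoryTopic:
    """Append-only log standing in for a single-partition Kafka topic."""

    def __init__(self, name):
        self.name = name
        self._log = []                      # records are never removed when read
        self._next_offset = defaultdict(int)  # consumer group -> next offset to read

    def produce(self, value):
        """Append a record and return its offset."""
        self._log.append(value)
        return len(self._log) - 1

    def poll(self, group, max_records=10):
        """Return unread records for a group and advance that group's offset."""
        start = self._next_offset[group]
        batch = self._log[start:start + max_records]
        self._next_offset[group] = start + len(batch)
        return batch

    def seek(self, group, offset):
        """Move a group's offset so earlier records can be read again."""
        self._next_offset[group] = offset


topic = InMemoryTopic("model.output")
for score in (0.91, 0.42, 0.77):
    topic.produce({"score": score})

# Two groups read the same records independently of each other.
explainer_batch = topic.poll("explainer")
validator_batch = topic.poll("validator")

# An audit job rewinds the validator group and reprocesses from offset 0.
topic.seek("validator", 0)
replayed = topic.poll("validator")
```

In a real deployment the broker stores the log and the committed offsets, and replay is bounded by the topic's retention settings.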

Each agent consumes and produces to specific Kafka topics, forming a flow:

[data.raw] → DataCollector → [data.cleaned]

[data.cleaned] → ModelSelector → [model.request]

[model.request] → Predictor → [model.output]

[model.output] → Explainer → [model.explained]

[model.output, model.explained] → Validator → [validated.results]

[validated.results] → FeedbackAgent → [training.feedback]
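The flow above can be written down as data and walked with a small dispatcher. The sketch below is a simulation under stated assumptions: the topic and agent names come from the flow, but `TOPOLOGY`, `run_pipeline`, the `id` and `trace` fields, and the placeholder "handler" (which only appends the agent name to a trace) are hypothetical. The one non-trivial part is the Validator, which waits until it has seen the same record id on both of its input topics before emitting.

```python
from collections import defaultdict, deque

# agent -> (input topics, output topic), copied from the flow above
TOPOLOGY = {
    "DataCollector": (["data.raw"], "data.cleaned"),
    "ModelSelector": (["data.cleaned"], "model.request"),
    "Predictor": (["model.request"], "model.output"),
    "Explainer": (["model.output"], "model.explained"),
    "Validator": (["model.output", "model.explained"], "validated.results"),
    "FeedbackAgent": (["validated.results"], "training.feedback"),
}


def run_pipeline(record_id):
    """Push one record through the topology; return (topic, trace) per hop."""
    subscribers = defaultdict(list)
    for agent, (inputs, _output) in TOPOLOGY.items():
        for topic in inputs:
            subscribers[topic].append(agent)

    pending = defaultdict(dict)  # (agent, record id) -> {topic: message}
    hops = []
    queue = deque([("data.raw", {"id": record_id, "trace": []})])

    while queue:
        topic, message = queue.popleft()
        hops.append((topic, message["trace"]))
        for agent in subscribers[topic]:
            inputs, output = TOPOLOGY[agent]
            key = (agent, message["id"])
            pending[key][topic] = message
            if len(pending[key]) < len(inputs):
                continue  # multi-input agent is still waiting for its other topic
            # Placeholder handler: keep the longest upstream trace and add this agent.
            longest = max((m["trace"] for m in pending[key].values()), key=len)
            del pending[key]
            queue.append((output, {"id": message["id"], "trace": longest + [agent]}))
    return hops


hops = run_pipeline("req-1")
topics_in_order = [topic for topic, _trace in hops]
final_trace = hops[-1][1]
```

Keeping the wiring in one table makes it easy to see which agents are affected when a topic is renamed or a new agent is added.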

🔧 Example Use Case: Real-Time Credit Scoring

Imagine a real-time credit scoring system:

- DataCollector reads from [user.transaction].

- ModelSelector evaluates features to pick a risk model (e.g., SME vs. consumer).

- Predictor scores the credit risk.

- Explainer adds human-readable rationale.

- Validator confirms thresholds and compliance.

- FeedbackAgent stores outcomes to Kafka for periodic model updates.

Each stage is decoupled, versionable, and can scale independently—thanks to Kafka.
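As a rough illustration, the middle stages of this use case can be sketched as plain functions. Everything specific here is an assumption made for the example: the `segment` and `amount` fields, the two toy scoring formulas, the `0.8` risk threshold, and the `approved` / `needs_review` statuses. In a real pipeline each function would sit behind its own consumer and producer rather than being called directly.

```python
# Hypothetical stand-in scorers; the divisors are invented for illustration only.
MODELS = {
    "sme_risk": lambda tx: min(1.0, tx["amount"] / 50_000),
    "consumer_risk": lambda tx: min(1.0, tx["amount"] / 5_000),
}


def select_model(tx):
    """ModelSelector: pick a risk model from transaction metadata."""
    return "sme_risk" if tx.get("segment") == "sme" else "consumer_risk"


def predict(tx, model_name):
    """Predictor: score the transaction with the selected model."""
    return {"tx_id": tx["tx_id"], "model": model_name, "risk": MODELS[model_name](tx)}


def explain(output):
    """Explainer: attach a human-readable rationale to the score."""
    rationale = f"{output['model']} scored risk at {output['risk']:.2f}"
    return {"tx_id": output["tx_id"], "rationale": rationale}


def validate(output, explanation, max_risk=0.8):
    """Validator: merge score and rationale, flag scores above the threshold."""
    status = "approved" if output["risk"] <= max_risk else "needs_review"
    return {**output, **explanation, "status": status}


def score_transaction(tx):
    """Chain the stages in the order the topics would connect them."""
    output = predict(tx, select_model(tx))
    return validate(output, explain(output))


sme_result = score_transaction({"tx_id": "t-1", "segment": "sme", "amount": 45_000})
consumer_result = score_transaction({"tx_id": "t-2", "segment": "consumer", "amount": 2_500})
```

Because each stage only depends on the shape of its input message, any one of them can be replaced or scaled out without touching the others.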

🚀 Benefits of Kafka-Orchestrated Multi-Agent Pipelines

- Modularity: Swap out agents without breaking the pipeline.

- Observability: Tap into topics to analyze bottlenecks or failures.

- Resilience: Replay from any retained offset after a crash or when drift is detected.

- Upgradability: Test new agents in shadow mode before full deployment.
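Shadow mode is worth a quick sketch. With Kafka, a candidate agent would typically run in its own consumer group on the same input topic and write to a separate topic that nothing downstream reads. The simulation below captures that idea without a broker. The function name `run_shadow`, the `tolerance` parameter, and the two toy scorers are hypothetical.

```python
def run_shadow(requests, primary, candidate, tolerance=0.05):
    """Score every request with both agents; only primary results go live."""
    published = []  # what downstream agents would see (e.g. on model.output)
    shadow = []     # kept aside for comparison, never consumed downstream
    disagreements = 0

    for request in requests:
        live = primary(request)
        trial = candidate(request)
        published.append({"id": request["id"], "score": live})
        shadow.append({"id": request["id"], "score": trial})
        if abs(live - trial) > tolerance:
            disagreements += 1

    rate = disagreements / len(requests) if requests else 0.0
    return published, shadow, rate


# Toy scorers, invented for the example.
def current_model(request):
    return request["amount"] / 1000


def new_model(request):
    return request["amount"] / 1000 if request["amount"] < 500 else 1.0


requests = [{"id": "a", "amount": 100}, {"id": "b", "amount": 900}]
published, shadow, disagreement_rate = run_shadow(requests, current_model, new_model)
```

A disagreement rate tracked over time gives a simple signal for deciding when the candidate is ready to take over the live topic.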

🛠️ Tools to Use with Kafka

- Apache Flink or Kafka Streams: For in-flight feature computation.

- MLflow / Kubeflow: For tracking experiments and managing the lifecycle of the models behind each agent.

- OpenLineage: For tracking data flow and dependencies.

- Protobuf/Avro: For schema evolution and strong typing between agents.
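To show what schema evolution buys you between agents, here is a stdlib-only sketch that uses a dataclass and JSON as a stand-in for Avro or Protobuf. It is not how those formats work on the wire, and a real setup would usually rely on generated classes and a schema registry. The `ModelOutput` message, its fields, and the `encode` / `decode` helpers are hypothetical. The sketch shows two compatibility rules: a newly added field gets a default so old messages still decode, and unknown fields are ignored so newer producers do not break older consumers.

```python
import json
from dataclasses import asdict, dataclass, fields
from typing import Optional


@dataclass(frozen=True)
class ModelOutput:
    request_id: str
    score: float
    model_name: str
    # Added in a later (hypothetical) schema version; the default keeps
    # messages written before the change readable.
    model_version: Optional[str] = None

    def __post_init__(self):
        # JSON carries no type information, so check the one numeric field by hand.
        if isinstance(self.score, bool) or not isinstance(self.score, (int, float)):
            raise TypeError("score must be a number")


def encode(message):
    """Serialize a message to bytes, as a producer would before sending."""
    return json.dumps(asdict(message)).encode("utf-8")


def decode(raw):
    """Deserialize bytes, ignoring fields this version does not know about."""
    data = json.loads(raw.decode("utf-8"))
    known = {f.name for f in fields(ModelOutput)}
    return ModelOutput(**{k: v for k, v in data.items() if k in known})


# A message from an older producer (no model_version) still decodes.
old_raw = json.dumps({"request_id": "r1", "score": 0.7, "model_name": "m"}).encode("utf-8")
old_message = decode(old_raw)

# A message from a newer producer (extra field) decodes with the extra dropped.
new_raw = json.dumps({"request_id": "r1", "score": 0.7, "model_name": "m",
                      "model_version": "2", "region": "eu"}).encode("utf-8")
new_message = decode(new_raw)
```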

In a world where AI agents are becoming composable microservices, Kafka provides the communication glue. By orchestrating your ML agents over Kafka, you enable agility, traceability, and scalability—core pillars of any production-grade AI system.

The future of machine learning isn’t a single monolith. It’s a collaborative agent ecosystem—and Kafka is the message bus that powers it.
